Confidential Computing is the protection of data in use by performing computation in a hardware-based Trusted Execution Environment.

Backed by industry giants such as Microsoft, Google and Intel, the Confidential Computing Consortium aims to protect data in use: encrypted data can be processed in memory without being exposed to the rest of the system, which reduces the exposure of sensitive data and gives users greater control and transparency.

In this blog we will discuss the challenges and benefits of using the TensorFlow (TF) library to deploy machine learning (ML) models on the Fortanix Confidential Computing Platform™, followed by simple step-by-step instructions to get you started.

Protecting data in use is a multi-faceted problem for ML models: private data is used to train a model, the model consumes inputs and produces inference outputs within a larger workflow, and the model algorithm can be confidential in its own right.

The problem is amplified when multiple data providers, who don’t trust each other, supply confidential data to a model written by an ML model provider who in turn wants to protect the model's intellectual property from those data providers.

With Fortanix Runtime Encryption Technology (RTE), applications can train ML models inside secure enclaves on-premises or in the public cloud. The confidential data and the application code for the model never leave the organizational trust boundary.

Our technology lets secure (SSL) connections terminate inside the secure enclave that runs the ML model, and lets a remote party verify the identity of that enclave.

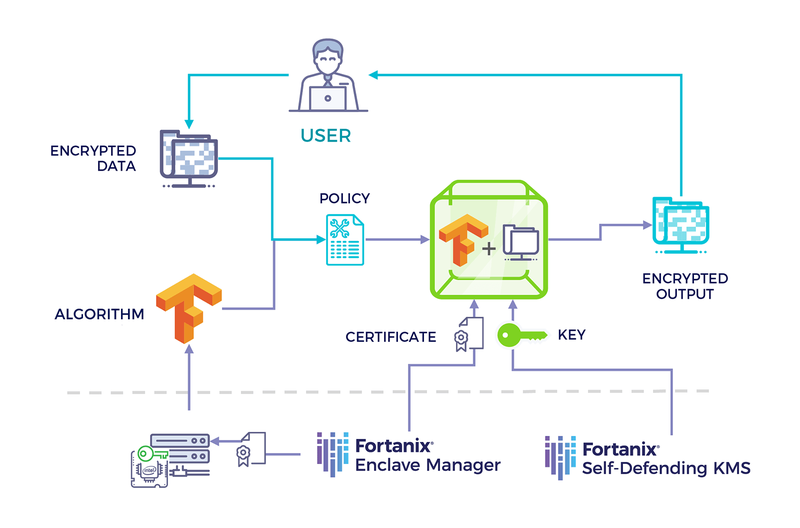

Fortanix simplifies the complex remote attestation process via Public Key Infrastructure (PKI). As part of the Fortanix Confidential Computing Platform, Fortanix Confidential Computing Manager™ can issue identity certificates based on attestation reports of the enclaves that are running the ML algorithm.

An organization can use existing PKI tools to embed secure enclave technology and extend the trust boundary to remote infrastructure, including public clouds.

A model provider can protect their intellectual property by publishing the algorithm in a secure enclave using the Fortanix Confidential Computing Platform.

The ML provider can continue to use popular frameworks such as TensorFlow, and when they are ready to publish a model, they can convert it for deployment within a secure enclave using the Fortanix Confidential Computing Manager service.

An important aspect of this seamless conversion process is that there is no modification required to the original application, enabling native models developed using common scripting languages such as Python to be implemented securely, without additional effort.

A data provider can verify the code identity of the model using the certificate issued by the Fortanix Confidential Computing Manager, and then allow access to the data according to internal policies expressed as simple rules.
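To make the idea of a rule-based access policy concrete, here is a minimal sketch in Python. It is not the Fortanix API: the `EnclaveIdentity` fields, the `AccessPolicy` class and the `is_access_allowed` function are hypothetical names chosen for illustration, and the sketch assumes the certificate chain has already been validated with your existing PKI tools and its identity claims extracted.

```python
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class EnclaveIdentity:
    """Hypothetical identity claims read from an already-validated certificate."""
    code_measurement: str  # hex digest identifying the enclave code (assumed field)
    signer: str            # hex digest identifying who signed the enclave (assumed field)
    debug_mode: bool       # True if the enclave was launched in debug mode (assumed field)


@dataclass(frozen=True)
class AccessPolicy:
    """A data provider's rules for which enclaves may read its data."""
    allowed_measurements: frozenset
    allowed_signers: frozenset
    allow_debug: bool = False


def _in_allow_list(value: str, allowed) -> bool:
    # Case-insensitive match on hex digests; compare_digest runs in constant time.
    return any(hmac.compare_digest(value.lower(), a.lower()) for a in allowed)


def is_access_allowed(identity: EnclaveIdentity, policy: AccessPolicy) -> bool:
    """Grant access only if every rule in the policy is satisfied."""
    if identity.debug_mode and not policy.allow_debug:
        return False
    return (_in_allow_list(identity.code_measurement, policy.allowed_measurements)
            and _in_allow_list(identity.signer, policy.allowed_signers))
```

A data provider would call `is_access_allowed` once per connection and release data only when it returns `True`. Keeping the policy as plain allow-lists makes it easy to review and audit.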

Furthermore, the model's input and output data can be encrypted and distributed under a simple policy using Fortanix Data Security Manager (DSM).

For example, the output of a sensitive computation on a dataset can be subjected to a quorum approval by an organization’s departmental authority before it is accepted into downstream workflows. A typical workflow is illustrated below:

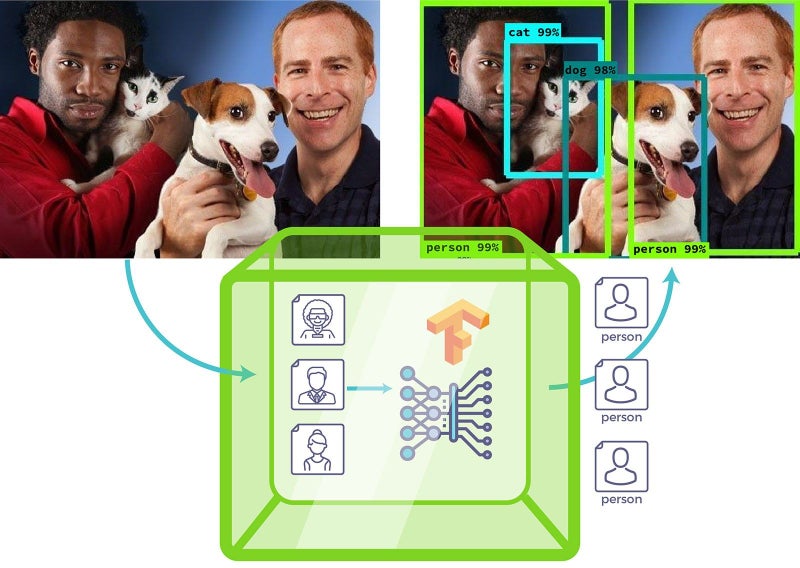

The diagram above provides an example of running a standard TF object detection model using the Fortanix Confidential Computing Platform.

The input images are sent encrypted to the model running in an enclave; the output is a list of anonymized objects in the image.

This model can be used to protect personal identity (face), gender or racial identity in input images while still conveying meaningful information about those images for statistical analysis.
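As an illustration of what "anonymized" output could mean, the sketch below reduces raw detections to per-label counts and discards bounding boxes, scores and pixels before anything leaves the enclave. The output format is an assumption, since the actual format used in the example is not specified here; `Detection`, `anonymize` and the `min_score` threshold are hypothetical names for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One object found by the detector (hypothetical structure)."""
    label: str    # class name, e.g. "person"
    score: float  # detector confidence in [0, 1]
    box: tuple    # (ymin, xmin, ymax, xmax), normalized coordinates


def anonymize(detections, min_score=0.5):
    """Return only per-label counts for detections at or above min_score.

    Boxes, scores and image content are dropped, so the result cannot be
    used to crop or re-identify an individual in the input image.
    """
    counts = Counter(d.label for d in detections if d.score >= min_score)
    return dict(sorted(counts.items()))
```

Downstream statistical analysis then sees only aggregate labels such as the number of people or vehicles per image.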

This example demonstrates how the privacy concerns related to data analytics can be addressed, including the ability to audit the workflow to achieve regulatory compliance for both the data provider and model owner.

Where do you begin with the Fortanix Confidential Computing Platform? Follow the steps in our developer section to run TensorFlow models inside secure enclaves. Review the example for implementation details.

3910 Freedom Circle, Suite 104,
