Training an accurate neural network usually requires a huge dataset. But what if you cannot collect many samples per person, such as faces or signatures, and your use case still demands a highly accurate model? The answer is a Siamese neural network!

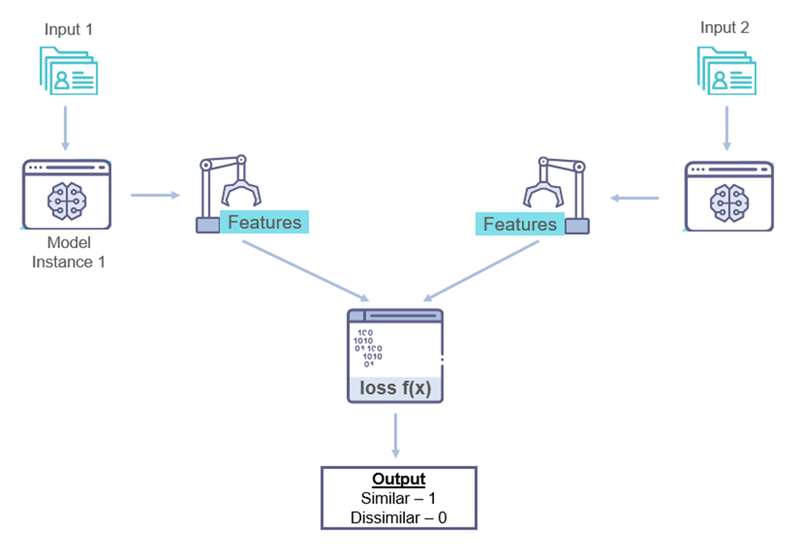

A Siamese neural network belongs to the class of neural network architectures that contain two or more identical subnetworks and compare inputs based on their similarity.

Notice how the terms “twins,” “identical,” and “similarity” fit together? Just as with twins or identical objects, the subnetworks share the same configuration, parameters, and weights.

Siamese networks are a good fit when we cannot generate much data but need to determine how similar two inputs are by comparing their feature vectors.
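To make the weight sharing concrete, here is a minimal NumPy sketch rather than the article's actual model: one hypothetical embedding function (a random linear layer with `tanh`, standing in for the real subnetwork) is applied to both inputs with the same weights, and the Euclidean distance between the two feature vectors serves as the similarity score. The names `embed` and `siamese_distance` are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)

# One set of weights shared by both "twin" branches.
# Hypothetical stand-in for the real subnetwork: a linear layer + tanh
# mapping a flattened 28x28 image to a 10-dimensional feature vector.
W = rng.normal(scale=0.05, size=(28 * 28, 10))
b = np.zeros(10)


def embed(image: np.ndarray) -> np.ndarray:
    """Map one image to its feature vector using the shared weights."""
    return np.tanh(image.reshape(-1) @ W + b)


def siamese_distance(image_a: np.ndarray, image_b: np.ndarray) -> float:
    """Run both inputs through the same subnetwork and compare the outputs."""
    feat_a = embed(image_a)  # twin 1
    feat_b = embed(image_b)  # twin 2: same function, same weights
    return float(np.linalg.norm(feat_a - feat_b))


img_1 = rng.random((28, 28))
img_2 = rng.random((28, 28))
print(siamese_distance(img_1, img_1))  # identical inputs -> 0.0
print(siamese_distance(img_1, img_2))  # different inputs -> positive distance
```

Because both branches call the same `embed` with the same `W`, identical inputs always map to a distance of 0, and the distance is symmetric in its two arguments.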

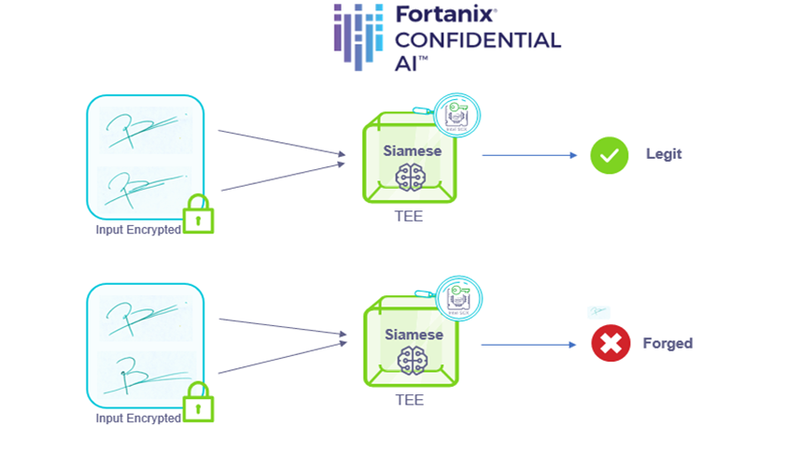

Siamese networks are widely adopted today by institutions for signature verification, facial recognition, and similar tasks that involve highly sensitive, private information subject to regulation.

While Siamese networks can rescue us from the challenge of limited data, Confidential Computing can protect this sensitive data, preserve the integrity of our application, and help with compliance.

Let's look more closely at the architecture of the Siamese network and at what it means to train and run the network with Fortanix Confidential Computing technology.

A few images per class are sufficient to learn the similarity function, that is, to train the network to decide whether two input images are similar. One common training objective is the triplet loss, in which the feature vector of a baseline input image is compared with the vectors of a similar input image and a dissimilar input image (the MNIST example below trains with the closely related contrastive loss on image pairs).

Training pushes the distance between a similar image (positive) and the baseline image (anchor) to be smaller than the distance between the dissimilar image (negative) and the anchor, by at least a margin. You can find more on the triplet loss in the FaceNet paper by Schroff et al.
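In FaceNet's formulation, the loss for one triplet is $\max\left(\lVert f(a) - f(p)\rVert_2^2 - \lVert f(a) - f(n)\rVert_2^2 + \alpha,\ 0\right)$, where $f$ is the embedding, $a$, $p$, $n$ are the anchor, positive, and negative, and $\alpha$ is the margin. A minimal NumPy sketch over precomputed embeddings follows; the default margin of 0.2 is an illustrative assumption, and `triplet_loss` is a hypothetical helper, not a library function.

```python
import numpy as np


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Mean triplet loss over a batch of embeddings (shape: [batch, dim]).

    The loss is zero once the anchor-negative squared distance exceeds the
    anchor-positive squared distance by at least `margin`.
    """
    pos_dist = np.sum((anchor - positive) ** 2, axis=1)  # ||f(a) - f(p)||^2
    neg_dist = np.sum((anchor - negative) ** 2, axis=1)  # ||f(a) - f(n)||^2
    return float(np.mean(np.maximum(pos_dist - neg_dist + margin, 0.0)))


a = np.array([[0.0, 0.0]])
p = np.array([[0.1, 0.0]])        # positive: close to the anchor
n_far = np.array([[1.0, 0.0]])    # negative far from the anchor
n_near = np.array([[0.05, 0.0]])  # negative even closer than the positive

print(triplet_loss(a, p, n_far))   # 0.0: constraint already satisfied
print(triplet_loss(a, p, n_near))  # ~0.2075: the network would be penalized
```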

Protecting the input data

Encrypting private and sensitive input data at rest and in transit is necessary, but it does not protect the data from runtime breaches while it is being processed. Fortanix Confidential Computing technology closes this gap by protecting data in use as you migrate your analytical workloads to the public cloud.

Fortanix keeps your data encrypted throughout its life cycle and exposes it in the clear only to the application running inside an enclave. Enclaves are secure regions of memory on the machine that protect your data in use from a range of attacks, including malicious insiders, root or other credential compromise, and network intruders.

In our example, the input signatures are encrypted using an AES256 secret from Fortanix Data Security Manager (DSM). These encrypted images become the input to the Siamese-based signature verification application hosted in a trusted execution environment in the public cloud.
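As a rough illustration of encrypting an input image with a 256-bit AES key, here is a sketch using the `cryptography` package's AES-GCM primitive. It assumes the key has already been retrieved from DSM; fetching it through the DSM API is not shown, and a locally generated key stands in for it. The choice of GCM mode and the nonce-prefixed layout are our assumptions (the article only specifies AES256), and `encrypt_image`/`decrypt_image` are hypothetical helpers.

```python
import os

from cryptography.hazmat.primitives.ciphers.aead import AESGCM


def encrypt_image(image_bytes: bytes, key: bytes) -> bytes:
    """Encrypt raw image bytes with AES-256-GCM; returns nonce || ciphertext+tag."""
    nonce = os.urandom(12)  # 96-bit nonce; must never repeat for the same key
    return nonce + AESGCM(key).encrypt(nonce, image_bytes, None)


def decrypt_image(blob: bytes, key: bytes) -> bytes:
    """Reverse of encrypt_image; raises InvalidTag if the data was tampered with."""
    nonce, ciphertext = blob[:12], blob[12:]
    return AESGCM(key).decrypt(nonce, ciphertext, None)


# Stand-in for the AES256 secret that would normally come from DSM.
key = AESGCM.generate_key(bit_length=256)
signature_image = b"placeholder bytes for a scanned signature image"
blob = encrypt_image(signature_image, key)
assert decrypt_image(blob, key) == signature_image
```

GCM authenticates as well as encrypts, so a modified image fails to decrypt instead of silently reaching the model.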

Deploying a Siamese network with the MNIST dataset in secure enclaves:

Converting the application:

Your current application and model can be enabled to run inside a secure enclave without rewriting them. Fortanix Confidential Computing Manager (CCM) provides a service to convert your containerized application with a few clicks.

CCM lets us manage the life cycle of enclaves across cloud infrastructure and orchestrate the workflow of datasets and our analytics application from a single management pane. You can find more information on the conversion process in the user guide.

CCM also manages remote attestation of the hardware, ensures that the code being run has not been tampered with, and provides geofencing capabilities for regulatory compliance.

The TLS certificate issued to the running enclave application can be used to authenticate it to third-party remote applications, providing a highly trusted way for applications to communicate.

Training the model:

The learning process of the Siamese network involves initializing the network and the loss function, then passing image pairs through the network.

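```python
from tensorflow.keras.layers import AveragePooling2D, Conv2D, Dense, Flatten, Input
from tensorflow.keras.models import Model

input_shape = (28, 28, 1)  # MNIST digits: 28x28 grayscale, one channel

# Shared base subnetwork: a small CNN that maps one image
# to a 10-dimensional feature vector.
inputs = Input(shape=input_shape)
x = Conv2D(4, (5, 5), activation="tanh")(inputs)
x = AveragePooling2D(pool_size=(2, 2))(x)
x = Conv2D(16, (5, 5), activation="tanh")(x)
x = AveragePooling2D(pool_size=(2, 2))(x)
x = Flatten()(x)
x = Dense(10, activation="tanh")(x)
base_network = Model(inputs, x)
base_network.summary()
```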
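The training code expects image pairs `tr_pairs` (shape `[n, 2, 28, 28, 1]`) and labels `tr_y`, but the article does not show how they are built. A minimal NumPy sketch, assuming the convention of the classic Keras MNIST Siamese example (label 1 for a same-digit pair, 0 for a different-digit pair) and one positive plus one negative pair per image; `make_pairs` is a hypothetical helper.

```python
import numpy as np


def make_pairs(images, labels, rng=None):
    """Build one positive and one negative pair per image.

    Returns pairs of shape (2 * n, 2, *image_shape) and float32 labels:
    1.0 = same class (positive pair), 0.0 = different class (negative pair).
    """
    rng = np.random.default_rng() if rng is None else rng
    classes = np.unique(labels)
    idx_by_class = {c: np.flatnonzero(labels == c) for c in classes}
    pairs, targets = [], []
    for i, label in enumerate(labels):
        # Positive pair: a randomly chosen image with the same label.
        j = rng.choice(idx_by_class[label])
        pairs.append([images[i], images[j]])
        targets.append(1.0)
        # Negative pair: a random image from a different class.
        other = rng.choice(classes[classes != label])
        k = rng.choice(idx_by_class[other])
        pairs.append([images[i], images[k]])
        targets.append(0.0)
    return np.array(pairs), np.array(targets, dtype="float32")


rng = np.random.default_rng(42)
x_demo = rng.random((6, 28, 28, 1)).astype("float32")
y_demo = np.array([0, 0, 1, 1, 2, 2])
tr_pairs, tr_y = make_pairs(x_demo, y_demo, rng)
print(tr_pairs.shape, tr_y.shape)  # (12, 2, 28, 28, 1) (12,)
```

With this layout, `tr_pairs[:, 0]` and `tr_pairs[:, 1]` are the left and right inputs passed to `model.fit`.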

The model was trained with a batch size of 128 for 11 epochs and exported to an encrypted directory. Both training and inference ran on an Azure DC8s_v3 VM with 3rd Generation Intel® Xeon® processors with Intel Software Guard Extensions (SGX) enabled.

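```python
import tensorflow as tf

epochs = 11

# `model` is the two-input Siamese model built on the shared base network;
# contrastive_loss, accuracy, custom_objects and the pairs are defined elsewhere.
model.compile(loss=contrastive_loss, optimizer="rmsprop", metrics=[accuracy])

model.fit(
    [tr_pairs[:, 0], tr_pairs[:, 1]],
    tr_y,
    batch_size=128,
    epochs=epochs,
    validation_data=([te_pairs[:, 0], te_pairs[:, 1]], te_y),
)

# clone_model re-initializes weights, so copy the trained ones before saving.
with tf.keras.utils.custom_object_scope(custom_objects):
    export_model = tf.keras.models.clone_model(model)
export_model.set_weights(model.get_weights())
export_model.save("/opt/fortanix/encrypted_dir/siamese.h5")
```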
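The compile call references `contrastive_loss` and `accuracy`, which the excerpt does not define. The NumPy sketch below shows only the arithmetic they typically compute, following the convention of the classic Keras MNIST Siamese example as we understand it: `y_pred` is the predicted distance, label 1 means similar, the margin is 1, and a pair counts as "similar" when its distance is below 0.5. These are assumptions; the Keras versions passed to `compile` would be written with tensor ops such as `tf.square` and `tf.maximum`.

```python
import numpy as np

MARGIN = 1.0  # assumed contrastive margin


def contrastive_loss(y_true, y_pred, margin=MARGIN):
    """y_true: 1 for similar pairs, 0 for dissimilar; y_pred: predicted distance."""
    y_true = np.asarray(y_true, dtype=float)
    d = np.asarray(y_pred, dtype=float)
    similar_term = y_true * d ** 2  # pulls similar pairs together
    dissimilar_term = (1 - y_true) * np.maximum(margin - d, 0.0) ** 2  # pushes others past the margin
    return float(np.mean(similar_term + dissimilar_term))


def accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of pairs where (distance < threshold) matches the label."""
    predicted_similar = (np.asarray(y_pred) < threshold).astype(float)
    return float(np.mean(predicted_similar == np.asarray(y_true, dtype=float)))


y_true = np.array([1.0, 0.0, 1.0, 0.0])
distances = np.array([0.1, 1.5, 0.7, 0.3])
print(contrastive_loss(y_true, distances))  # ~0.2475
print(accuracy(y_true, distances))          # 0.5
```

Dissimilar pairs stop contributing once their distance exceeds the margin, so the network is not pushed to separate them indefinitely.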

Inference:

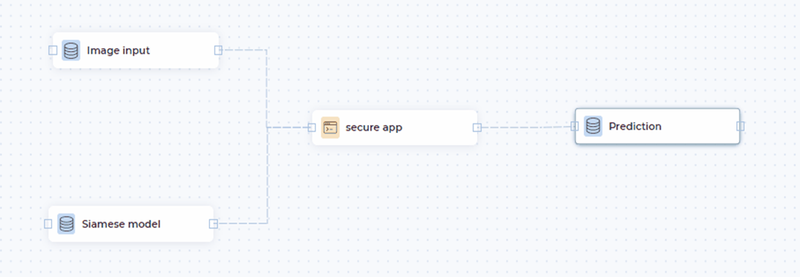

The saved model can be packaged with the containerized application, or it can be made available securely to the application at runtime using CCM workflows. In the latter case, an encrypted model can be pushed to the enclave application along with the input images.

CCM workflows let you deploy complex designs on confidential infrastructure with simple drag-and-drop options, as seen below. The resulting prediction can be pushed to the desired location.

A CCM workflow ensures that the input data is available to the application only after successful attestation and verification of code integrity. You can find the high-level flow of CCM usage in our knowledge base.

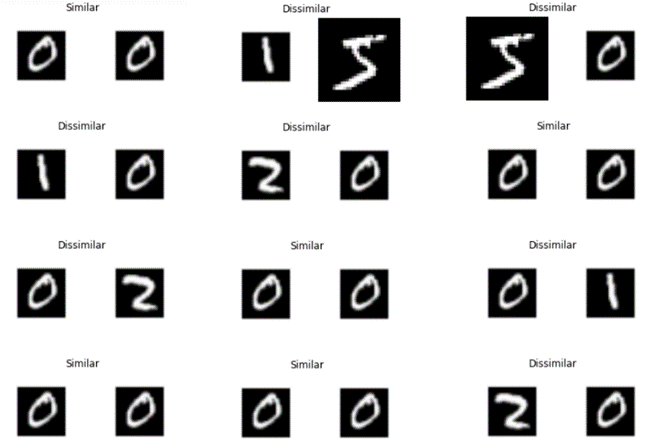

The secure app running in the enclave pulls the trained model, siamese.h5, and a sample of the MNIST test set (handwritten digits from 0 to 9), both in encrypted form. The similarity between two digits is based on the distance the network computes between the images.

The distance between a test sample and another image of the same class is small, while the distance between the test sample and an image of a different class is large. Comparing this distance with a threshold determines the prediction “Similar” or “Dissimilar”.

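```python
import numpy as np
import tensorflow as tf

# Pick 10 random pairs of test image paths (testImagePaths is defined elsewhere).
pairs = np.random.choice(testImagePaths, size=(10, 2))

# Load the trained model from the encrypted model location (ENC_MODEL_PATH is
# defined elsewhere). compile=False skips the custom training loss, unused here.
model = tf.keras.models.load_model(ENC_MODEL_PATH + "siamese.h5", compile=False)

# enc_digit_A / enc_digit_B: batches of digit images, one per side of each pair.
preds = model.predict([enc_digit_A, enc_digit_B])
distance = preds[0][0]  # predicted distance for the first pair (smaller = more similar)
```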
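The snippet stops at the raw model output. Assuming the model outputs a Euclidean distance, as a contrastive-loss Siamese model does, the final label can be obtained by thresholding. The 0.5 threshold is an assumption (halfway to a contrastive margin of 1) and would normally be tuned on validation pairs; `to_label` and the sample `preds` values are hypothetical.

```python
import numpy as np


def to_label(distance: float, threshold: float = 0.5) -> str:
    """Map a predicted pair distance to the article's two outcomes."""
    return "Similar" if distance < threshold else "Dissimilar"


# Hypothetical model.predict output for two pairs, shape (batch, 1).
preds = np.array([[0.12], [0.93]])
for i, (distance,) in enumerate(preds):
    print(f"pair {i}: distance={distance:.2f} -> {to_label(float(distance))}")
```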

Beyond securing the proprietary model and private data, Fortanix Confidential Computing offers other important capabilities that help AI reach its full potential:

- Control the sourcing and flow of data with geofencing, using policies based on the location of the data.

- Encrypt and tokenize data and manage secrets with Data Security Manager. Only verified applications in a trusted environment can access the secrets.

- Verify the integrity of the AI model and execution environment. Only approved and attested user applications are issued TLS certificates.

- Protect private information even at runtime with confidential computing, while still allowing access to relevant datasets for research and quality model development.

- Activity logs at every step and attestation reports support audits, and proof of execution helps you meet the most stringent regulations.

We’re fully geared up to serve customers and partners with Confidential Computing solutions today. Would you prefer to speak with one of our experts about how we can help your business? Get in touch with us.