The introduction of AI technologies has brought a major transformation to the healthcare sector. AI plays a key part in making diagnoses more accurate, improving treatment plans, and changing the way patients are cared for. But putting AI to use in healthcare also raises important security issues that must be addressed.

This blog examines AI security in healthcare in detail, with a focus on data privacy and integrity. We will also discuss mitigation strategies, look at real-world examples, and close with future trends and recommendations.

As we work out how to use AI to transform patient care and medical diagnosis, we face a central problem: how do we keep the underlying data safe and private?

Understanding the Unique Security Challenges of Artificial Intelligence in Healthcare:

Privacy and Data Protection:

Healthcare data is highly sensitive, combining personal identifiers with medical information. Protecting patient privacy and complying with data protection regulations such as the Health Insurance Portability and Accountability Act (HIPAA) and the General Data Protection Regulation (GDPR) are of paramount importance. The risks of unauthorized access, data breaches, and violations of patient privacy call for strict security measures.

Additionally, the value of medical data on the black market makes it an attractive target for cybercriminals.

Data integrity and reliability:

Ensuring the accuracy and reliability of AI-powered healthcare systems is critical to patient safety. Data integrity threats such as adversarial attacks and data poisoning pose serious problems. Adversarial attacks intentionally manipulate input data to mislead AI models, while data poisoning injects malicious data into training datasets to degrade model performance.
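To make data poisoning concrete, here is a minimal sketch, assuming a deliberately tiny nearest-centroid classifier as a stand-in for a real clinical model. It shows how a handful of mislabelled records injected into the training set can flip the prediction for a borderline patient. The features, labels, and helper names are invented for illustration.

```python
from statistics import fmean


def centroids(samples):
    """Compute the mean feature vector of each class from (features, label) pairs."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(model, features):
    """Return the label whose class centroid is closest (squared Euclidean distance)."""
    def dist2(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(model, key=lambda label: dist2(model[label]))


# Invented two-feature training data: label 0 = low risk, label 1 = high risk.
clean = [
    ((0.0, 0.0), 0), ((1.0, 0.0), 0), ((0.0, 1.0), 0),
    ((5.0, 5.0), 1), ((6.0, 5.0), 1), ((5.0, 6.0), 1),
]

# Poisoning: an attacker slips in a few records from the low-risk region
# labelled as high risk, which drags the high-risk centroid toward them.
poison = [((1.5, 1.5), 1)] * 4

patient = (2.0, 2.0)
clean_model = centroids(clean)
poisoned_model = centroids(clean + poison)
print(predict(clean_model, patient))     # 0
print(predict(poisoned_model, patient))  # 1
```

The attacker never touches the model or the patient's record; corrupting a small share of the training data is enough, which is why provenance checks and validation of training data matter.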

Data sharing concerns:

In a world where data is the new gold, privacy concerns and barriers to data sharing have become formidable obstacles, limiting collaboration and slowing innovation in data-driven research. The challenge is to protect sensitive data while still unlocking the power of collective intelligence: collaboration drives innovation by bringing institutions together to combine data and make groundbreaking discoveries.

Regulatory and Ethical Considerations:


Privacy Compliance:

Important steps toward privacy compliance include putting data governance frameworks in place, conducting regular risk assessments, and establishing strong privacy policies and processes. Ways to ensure compliance include regular audits, encryption and pseudonymization, and training healthcare workers in data-privacy best practices. Working with legal and regulatory experts helps organisations keep up with new rules and adapt their AI systems to them.
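Pseudonymization replaces direct identifiers with consistent tokens so that records can still be linked for analysis. One common approach, sketched here under the assumption that a secret key is held separately from the data (for example in a key management system), is a keyed hash such as HMAC-SHA256. The record layout and the `pseudonymize` / `pseudonymize_record` helpers are hypothetical. Note that under GDPR pseudonymized data is still personal data, because whoever holds the key can re-identify records by recomputing tokens for known identifiers.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Map an identifier to a stable token using HMAC-SHA256.

    The same identifier and key always give the same token, so records can be
    joined, but the token cannot be recomputed without the secret key.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_record(record: dict, key: bytes, fields=("patient_id", "name")) -> dict:
    """Return a copy of the record with direct identifiers replaced by tokens."""
    out = dict(record)
    for field in fields:
        if field in out:
            out[field] = pseudonymize(str(out[field]), key)
    return out


# Assumption: in production the key comes from a key management system,
# not from code; a throwaway key is generated here for the demo.
key = secrets.token_bytes(32)
record = {"patient_id": "MRN-004217", "name": "Jane Doe", "hba1c": 6.9}
safe = pseudonymize_record(record, key)
print(safe["hba1c"])            # clinical values are left untouched
print(len(safe["patient_id"]))  # 64 hex characters
```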


Ethical implications of AI in healthcare:

AI holds great promise, but it also raises ethical questions. Fairness, transparency, and the removal of bias from AI algorithms are essential to avoid discrimination and unintended disparities in healthcare outcomes.

Organisations should take steps to detect and correct bias in AI models, encourage diversity in training datasets, and establish clear procedures for handling ethical issues. When using healthcare data for AI applications, it is important to respect the patient's right to privacy and obtain their informed consent. Following ethical guidelines and frameworks, such as those from professional medical associations and AI ethics panels, helps ensure that AI is developed and used responsibly.
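One simple way to start looking for bias is to compare error rates across patient groups. The sketch below computes the per-group true positive rate (sensitivity) and the gap between groups, a check often called equal opportunity. The data and group labels are invented, and the helper names are hypothetical; which fairness metric is appropriate depends on the clinical context, and this is only one of several.

```python
from collections import defaultdict


def true_positive_rates(y_true, y_pred, groups):
    """Per-group true positive rate (sensitivity): TP / (TP + FN)."""
    tp = defaultdict(int)
    positives = defaultdict(int)
    for actual, predicted, group in zip(y_true, y_pred, groups):
        if actual == 1:
            positives[group] += 1
            if predicted == 1:
                tp[group] += 1
    return {g: tp[g] / positives[g] for g in positives}


def equal_opportunity_gap(y_true, y_pred, groups):
    """Largest difference in TPR between any two groups (0 means parity)."""
    rates = true_positive_rates(y_true, y_pred, groups)
    return max(rates.values()) - min(rates.values())


# Invented labels: 1 = condition present (y_true) / flagged by the model (y_pred).
y_true = [1, 1, 1, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(true_positive_rates(y_true, y_pred, groups))
print(equal_opportunity_gap(y_true, y_pred, groups))
```

Here group A's sensitivity is 2/3 and group B's is 1/2, so the model misses true cases in group B more often; a gap like this is a prompt to investigate training data coverage for that group.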

Mitigating Security Risks in AI Healthcare Systems:

Secure Data Management:

Safe healthcare AI systems depend on sound data management. Data at rest and in transit should be protected with strong encryption algorithms backed by secure key management. Strong access controls, such as role-based access control and multifactor authentication, help prevent unauthorised access to sensitive healthcare data.
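Role-based access control with an MFA requirement can be expressed as a small deny-by-default policy check. The roles, permission strings, and `is_allowed` function below are hypothetical, and the MFA step itself (for example a one-time code or hardware key) is assumed to have happened upstream; here it is represented only by a flag on the session.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping; a real deployment would load this
# from a policy store and audit every change to it.
ROLE_PERMISSIONS = {
    "physician": {"read:record", "write:record", "read:imaging"},
    "nurse": {"read:record"},
    "data_scientist": {"read:deidentified"},
}

# Actions that touch identifiable data additionally require completed MFA.
MFA_REQUIRED = {"read:record", "write:record", "read:imaging"}


@dataclass
class Session:
    user: str
    roles: set
    mfa_verified: bool


def is_allowed(session: Session, action: str) -> bool:
    """Deny by default; allow only if a role grants the action (plus MFA when required)."""
    granted = any(action in ROLE_PERMISSIONS.get(role, set()) for role in session.roles)
    if not granted:
        return False
    if action in MFA_REQUIRED and not session.mfa_verified:
        return False
    return True


nurse = Session("alice", {"nurse"}, mfa_verified=True)
analyst = Session("bob", {"data_scientist"}, mfa_verified=False)
print(is_allowed(nurse, "read:record"))         # True
print(is_allowed(nurse, "write:record"))        # False: role lacks the permission
print(is_allowed(analyst, "read:deidentified")) # True: no MFA needed for de-identified data
```

Unknown roles and unlisted actions fall through to `False`, so a misconfigured policy fails closed rather than open.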

Secure data-sharing and collaboration protocols also let healthcare workers collaborate without putting patient information at risk. Techniques such as secure federated learning enable AI models to be trained on decentralised data sources without centralising the data, which lowers privacy risk.
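One ingredient often used in secure federated learning is secure aggregation: each hospital masks its model update so that the coordinating server learns only the sum of all updates, not any individual one. The sketch below shows the pairwise-masking idea with fixed-point integers. It is a simplified illustration under stated assumptions: the seeded random generator stands in for secrets that each pair of hospitals would derive through key agreement, and real protocols also handle participants that drop out mid-round, which this does not.

```python
import random

MODULUS = 2**32  # all arithmetic is done modulo this value
SCALE = 2**16    # fixed-point scaling for real-valued model updates


def encode(update):
    """Fixed-point encode a list of floats into integers mod MODULUS."""
    return [round(v * SCALE) % MODULUS for v in update]


def decode(values):
    """Invert encode(), treating the upper half of the range as negative numbers."""
    out = []
    for v in values:
        if v >= MODULUS // 2:
            v -= MODULUS
        out.append(v / SCALE)
    return out


def pairwise_masks(n_clients, dim, seed=0):
    """Stand-in for pairwise shared secrets: masks[(i, j)] is known only to i and j.

    Assumption: real protocols derive these via key agreement; a seeded RNG is
    used here purely for illustration.
    """
    rng = random.Random(seed)
    return {
        (i, j): [rng.randrange(MODULUS) for _ in range(dim)]
        for i in range(n_clients) for j in range(i + 1, n_clients)
    }


def mask_update(client, encoded, masks, n_clients):
    """Add masks shared with higher-indexed peers, subtract those shared with lower ones."""
    out = list(encoded)
    for other in range(n_clients):
        if other == client:
            continue
        pair = (min(client, other), max(client, other))
        sign = 1 if client < other else -1
        out = [(o + sign * m) % MODULUS for o, m in zip(out, masks[pair])]
    return out


def aggregate(masked_updates):
    """Server sums the masked vectors; every pairwise mask cancels in the total."""
    return [sum(column) % MODULUS for column in zip(*masked_updates)]


updates = [[0.5, -1.25], [0.25, 0.75], [-0.125, 0.5]]  # three hospitals' updates
masks = pairwise_masks(len(updates), dim=2)
masked = [mask_update(i, encode(u), masks, len(updates)) for i, u in enumerate(updates)]
print(decode(aggregate(masked)))  # [0.625, 0.0]
```

Each masked vector on its own looks like random numbers to the server, yet the sum it computes equals the sum of the true updates.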

Robust Model Protection:

It's important to protect AI models from unauthorised access, modification, or misuse. Organizations should follow secure development and deployment practices, such as secure coding, regular security audits, and continuous monitoring. Model explainability and interpretability methods help people understand the decisions AI models make, which increases transparency and makes it possible to spot potential biases or vulnerabilities.
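Protecting a model against unauthorised changes can start with verifying a cryptographic digest of the model artifact before it is loaded. The sketch below assumes the expected SHA-256 digest was recorded when the model was approved and is itself stored somewhere an attacker cannot modify (for example, a signed release manifest); a digest kept next to the model file would not help. The helper names and the placeholder model bytes are hypothetical.

```python
import hashlib
import hmac


def sha256_digest(data: bytes) -> str:
    """Hex SHA-256 digest of a serialized model artifact."""
    return hashlib.sha256(data).hexdigest()


def verify_model(data: bytes, expected_digest: str) -> bool:
    """Return True only if the artifact matches the digest recorded at approval time.

    hmac.compare_digest performs a constant-time comparison.
    """
    return hmac.compare_digest(sha256_digest(data), expected_digest)


# At approval time: record the digest of the approved model.
approved_model = b"example serialized model weights v1"
released_digest = sha256_digest(approved_model)

# At load time: refuse to serve a model whose bytes have changed.
tampered_model = approved_model.replace(b"weights", b"weightz")
print(verify_model(approved_model, released_digest))  # True
print(verify_model(tampered_model, released_digest))  # False
```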

Techniques such as adversarial training and robust optimisation can help defend against adversarial attacks. Adversarial training adds adversarial examples to the training data to make models more resilient, while robust optimisation trains models to minimise their worst-case loss over a set of allowed input perturbations.
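As a minimal sketch of adversarial training, the code below trains a two-feature logistic regression on each clean example plus its fast gradient sign method (FGSM) perturbation, then measures accuracy when every input is attacked. The toy data, hyperparameters, and function names are invented. For a linear model, the FGSM step happens to be the exact worst-case perturbation within an L∞ ball of radius `eps`, so this loop is also a small instance of robust optimisation; for deep networks, FGSM only approximates that inner maximisation.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sign(v):
    return (v > 0) - (v < 0)


def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """FGSM for logistic regression: d(log-loss)/dx = (p - y) * w,
    so each feature is pushed by eps in the direction that increases the loss."""
    p = predict_proba(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def adversarial_train(data, eps, lr=0.1, epochs=200):
    """Full-batch gradient descent on clean plus FGSM-perturbed copies of every example."""
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        batch = data + [(fgsm(w, b, x, y, eps), y) for x, y in data]
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in batch:
            err = predict_proba(w, b, x) - y  # gradient of log-loss w.r.t. the logit
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(batch)
    return w, b


def robust_accuracy(w, b, data, eps):
    """Accuracy when every example is replaced by its FGSM perturbation."""
    hits = sum((predict_proba(w, b, fgsm(w, b, x, y, eps)) >= 0.5) == bool(y) for x, y in data)
    return hits / len(data)


data = [([2.0, 2.0], 1), ([2.5, 1.5], 1), ([1.5, 2.5], 1),
        ([-2.0, -2.0], 0), ([-2.5, -1.5], 0), ([-1.5, -2.5], 0)]
w, b = adversarial_train(data, eps=0.5)
print(robust_accuracy(w, b, data, eps=0.5))  # 1.0
```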

Privacy Enhancing Technologies:

It is important to protect the privacy of patients while using the power of AI.

Secure multiparty analytics (sMPA): Technologies such as confidential computing let multiple parties analyse data together without revealing the raw data to one another. They do this by performing computations inside hardware-based Trusted Execution Environments (TEEs), or secure enclaves, with cryptographic protection of the data throughout.
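A central idea behind confidential computing is remote attestation: before a data owner releases the key that decrypts its data, it checks evidence that the data will be processed by approved code inside a genuine enclave. The sketch below is a deliberately simplified model of that handshake, not the Intel SGX, Fortanix, or BeeKeeperAI API; all names are hypothetical. In particular, the HMAC "vendor key" is a toy stand-in, since real SGX attestation relies on asymmetric signatures verified through the hardware vendor's attestation infrastructure.

```python
import hashlib
import hmac
import secrets

# Toy stand-in for the hardware vendor's attestation key (assumption: real
# attestation uses asymmetric signatures, not a shared HMAC key).
VENDOR_KEY = secrets.token_bytes(32)


def measure(enclave_code: bytes) -> str:
    """Measurement = hash of the code loaded into the enclave (cf. SGX's MRENCLAVE)."""
    return hashlib.sha256(enclave_code).hexdigest()


def make_quote(enclave_code: bytes) -> dict:
    """What the simulated hardware reports: the measurement plus a tag vouching for it."""
    m = measure(enclave_code)
    tag = hmac.new(VENDOR_KEY, m.encode(), hashlib.sha256).hexdigest()
    return {"measurement": m, "tag": tag}


def release_key(quote: dict, approved_measurements: set, data_key: bytes):
    """Data owner releases its decryption key only to an approved, genuine enclave."""
    expected = hmac.new(VENDOR_KEY, quote["measurement"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, quote["tag"]):
        return None  # quote was not produced by the trusted hardware
    if quote["measurement"] not in approved_measurements:
        return None  # genuine enclave, but not running the approved analytics code
    return data_key


approved_code = b"approved analytics v1"
data_key = secrets.token_bytes(32)
allowlist = {measure(approved_code)}

print(release_key(make_quote(approved_code), allowlist, data_key) == data_key)  # True
print(release_key(make_quote(b"modified code"), allowlist, data_key))           # None
```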

Federated learning: With federated learning, AI models can be trained across different healthcare institutions without sharing real patient data. This collaborative learning model lets organizations benefit from the combined knowledge of many institutions while keeping their data private.
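The core loop of federated learning can be sketched with federated averaging (FedAvg), one widely used aggregation rule: each institution improves the current model on its own data and sends back only the updated parameters, which the coordinator averages, weighted by each site's sample count. The one-parameter linear model, the hospital data, and the function names are invented to keep the arithmetic visible.

```python
def local_update(w, xs, ys, lr=0.01, steps=5):
    """A few gradient-descent steps on mean squared error for the model y = w * x."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
    return w


def federated_averaging(clients, rounds=50, w=0.0):
    """FedAvg: each site trains locally; the server averages weights by sample count."""
    total = sum(len(xs) for xs, _ in clients)
    for _ in range(rounds):
        local_weights = [local_update(w, xs, ys) for xs, ys in clients]
        w = sum(len(xs) * wk for (xs, _), wk in zip(clients, local_weights)) / total
    return w


# Each tuple is one hospital's private data; only the scalar weight leaves the site.
hospital_a = ([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
hospital_b = ([4.0, 5.0], [8.0, 10.0])
w = federated_averaging([hospital_a, hospital_b])
print(round(w, 4))  # 2.0
```

The shared model recovers the true slope of 2 even though neither hospital ever sees the other's records. Model updates can still leak information, which is why federated learning is often combined with secure aggregation.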

Case Studies & Real-Life Examples:

Looking at how AI has been deployed securely in healthcare offers valuable lessons. Below we profile organizations that have unlocked the potential of safer AI in healthcare. Their experience shows that, with the right strategies and technology, the security challenges of healthcare AI can be addressed in applications ranging from diagnosing diseases to improving patient care.

- BeeKeeperAI™ runs privacy-preserving analytics on protected data from multiple institutions in a confidential computing environment that includes end-to-end encryption, secure computing enclaves, and Intel’s latest SGX-enabled processors, comprehensively protecting both the data and the algorithm IP.

- DHEX, a vertical of Zuellig Pharma Analytics, is a regional healthcare data movement whose mission is to spearhead data democratization and insight innovation. It is an ecosystem built on value partnerships in which data partners and data consumers come together to harness the value of data.

How Fortanix Is Helping Secure AI in Healthcare:

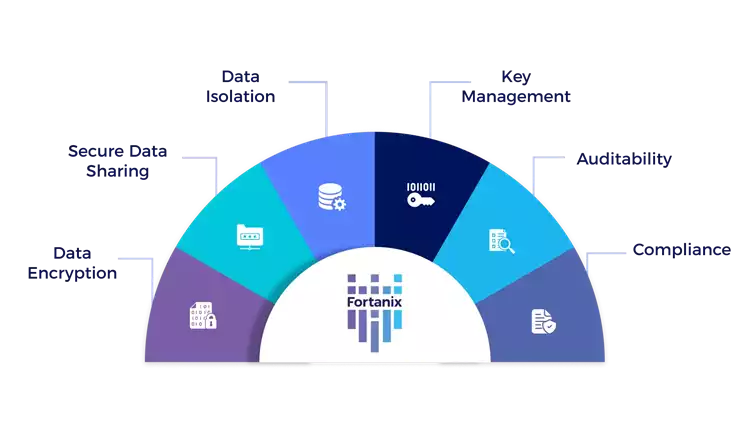

Fortanix provides a confidential computing platform that helps secure AI in healthcare, with a number of features that make it well suited to healthcare applications. Fortanix Confidential Computing Manager (CCM) and Fortanix Data Security Manager (DSM) can support the use cases above by providing healthcare organizations with data security and key management. Key capabilities include:

- Data encryption: Fortanix DSM can secure data both at rest and in motion, making it harder for unauthorized users to access it.

- Secure data sharing: Fortanix CCM lets multiple parties share their data without risking the loss of their intellectual property.

- Data isolation: Data being processed in the confidential computing environment is isolated from the rest of the system, so unauthorised users cannot access it.

- Key management: Fortanix DSM manages cryptographic keys, keeping them secure and accessible only to authorized users.

- Auditability: The platform keeps a full audit trail of all activity in the confidential computing environment, so that unauthorized access can be detected.

- Compliance: The platform supports compliance with regulations such as HIPAA and GDPR.
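A common way to make an audit trail tamper-evident is to chain each log entry to the hash of the one before it. The sketch below is a generic illustration of that technique, not a description of how Fortanix implements auditing; the event fields and function names are hypothetical. Editing or reordering past entries breaks the chain, while detecting deletion of the newest entries additionally requires anchoring the latest hash somewhere an attacker cannot change.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with this event (canonical JSON)."""
    payload = prev_hash + json.dumps(event, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: list, event: dict) -> None:
    """Append an event, chaining it to the hash of the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "hash": _entry_hash(prev, event)})


def verify(log: list) -> bool:
    """Recompute the chain; an edited or reordered entry makes verification fail."""
    prev = GENESIS
    for entry in log:
        if entry["hash"] != _entry_hash(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True


log = []
append(log, {"user": "alice", "action": "read:record", "record": "r-17"})
append(log, {"user": "bob", "action": "run:model", "model": "risk-v2"})
print(verify(log))                   # True
log[0]["event"]["user"] = "mallory"  # tamper with history
print(verify(log))                   # False
```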

Conclusion:

The privacy of patients, the reliability of data, and the confidence people have in AI-driven healthcare systems all depend on how well those systems are protected. Healthcare organisations can use AI to its full potential while protecting data privacy and integrity by recognising the particular security challenges, putting strong security measures in place, adhering to regulations, and addressing ethical issues.

Innovative organizations such as Zuellig Pharma, with its DHEX initiative, and BeeKeeperAI are helping to make AI solutions in healthcare safer. In the future, AI security in healthcare will be shaped by new technologies and adherence to best practices, allowing organisations to provide AI-driven healthcare services that are safe and efficient.
