DevOps is fast becoming the standard approach for building software and speeding up application delivery. The recent Oracle-KPMG Threat Report 2020 highlights the broad adoption of DevOps across a wide range of enterprises: nearly two-thirds of respondents already use DevOps or plan to do so within the next 12 to 24 months. However, DevOps also brings increased security risks.

Increased proliferation of threats and tools within the DevOps lifecycle

As companies adopt the DevOps model, more privileged accounts and more sensitive information, such as encryption keys, container secrets, passwords, and service account details, need to be created and shared. These secrets are often easy targets for cybercriminals.

According to a recent SANS report, 46% of IT professionals have faced security risks during the initial phases of development. Cybercriminals have become adept at accessing confidential data through cryptographic keys, tokens, passwords, and other credentials that developers inadvertently leave behind in applications, sites, and files. The growing use of disparate tools for automation and orchestration adds to this risk.
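To make the risk concrete, here is a minimal sketch of the kind of check that catches credentials left behind in files. It assumes a small, hypothetical rule set (an AWS-style access key ID pattern, PEM private-key headers, and generic `password = "..."` style assignments); `scan_text` and `SECRET_PATTERNS` are illustrative names, and dedicated secret scanners use far larger, tuned rule sets.

```python
import re

# Illustrative rules only; real secret scanners ship much larger, tuned rule sets.
SECRET_PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # PEM headers of private keys (RSA, EC, OpenSSH, or generic PKCS#8).
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    # Assignments such as password = "..." or api_key: '...' (case-insensitive).
    "generic_assignment": re.compile(
        r"(?i)(password|passwd|secret|api_key|token)\s*[:=]\s*['\"][^'\"]{4,}['\"]"
    ),
}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) pairs for lines that look like hardcoded secrets."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule_name))
    return findings


if __name__ == "__main__":
    sample = (
        'db_host = "localhost"\n'
        'db_password = "hunter2hunter2"\n'
        'aws_key = "AKIAIOSFODNN7EXAMPLE"\n'  # AWS's documented example key ID
    )
    for lineno, rule_name in scan_text(sample):
        print(f"line {lineno}: possible {rule_name}")
```

Running a check like this as an early pipeline step flags leaked credentials before they reach a shared repository, which is where they become easy targets.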

Most of these tools, such as Ansible and Jenkins, lack a common workflow and shared standards for access management. With a variety of development and deployment tools, inconsistent workflows, and many teams each working on independent DevOps projects, admins often struggle to manage secrets centrally and grant access to the right people and teams.

How can DevOps teams address the challenges of securing data?

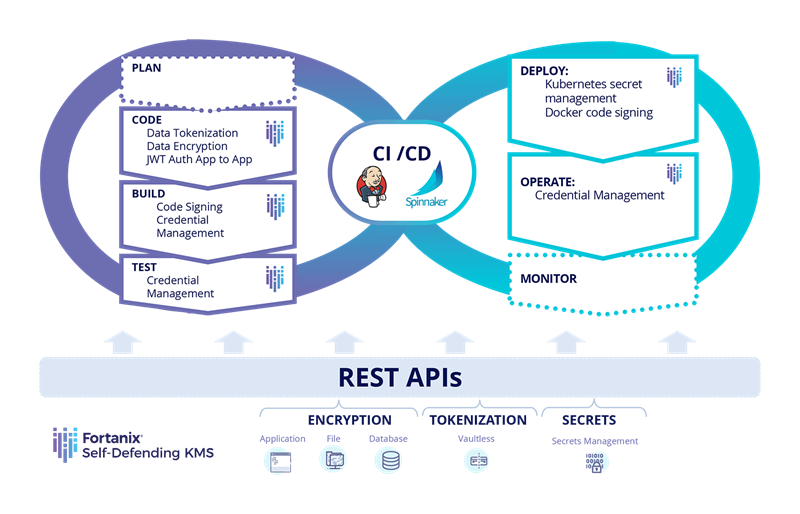

The answer is to integrate application data protection into every phase of the DevOps pipeline.

This can be done by focusing on four critical components of application data security:

- Secrets Management – Regardless of the tools you use in your development pipeline, protecting credentials and API keys from compromise is critical. Secrets must live outside the source code.

- Encryption – All application data should be encrypted. Integrating cryptography into applications makes it possible to encrypt data from the coding phase onward. Wherever your secrets live, whether in files, remote storage, or CI/CD tools, they must be encrypted rather than stored as plain text.

- APIs and Plugins – Providing developers with the data security interfaces they need during application development makes it easier to build protection in from the start.

- Data Tokenization – Substitute tokens for sensitive data through a REST API to achieve privacy compliance. Sensitive data such as credit card numbers and Social Security numbers can be masked during the coding phase to achieve compliance and add an additional layer of protection to application data.
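One common way to keep secrets outside the source code is to have the CI/CD pipeline or a secrets manager inject them into the process environment at runtime, so the code only ever knows the secret's name. The sketch below assumes that approach; `get_secret`, `MissingSecretError`, and the `DB_PASSWORD` variable name are hypothetical, and a dedicated secrets-management API could replace the environment lookup.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret has not been provided to the process."""


def get_secret(name: str) -> str:
    """Read a secret injected at runtime instead of hardcoding it in the source.

    Only the variable's name appears in the code; the value is supplied by the
    deployment environment (for example, a CI/CD tool or secrets manager).
    """
    value = os.environ.get(name)
    if not value:
        # Fail fast rather than silently falling back to a default credential.
        raise MissingSecretError(f"secret {name!r} is not set in the environment")
    return value


if __name__ == "__main__":
    # DB_PASSWORD is a hypothetical variable name used for illustration.
    try:
        password = get_secret("DB_PASSWORD")
        print("loaded DB_PASSWORD (value not printed)")
    except MissingSecretError as err:
        print(f"configuration error: {err}")
```

Failing fast on a missing secret is a deliberate choice: a silent fallback to a default password is exactly the kind of leftover credential attackers look for.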

What is Data Tokenization and how does it work?

Data Tokenization has been a game changer in the data security sphere. Data Tokenization is the process of substituting a sensitive, meaningful piece of data, such as an account number, with a non-sensitive, random string of characters known as a ‘token’. A token has no exploitable value if breached, so it can be handled and used in place of the original data. The relationship between each token and the sensitive value it replaces is kept in a ‘vault’, a secure database that maps tokens back to the original data.
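The vault idea can be sketched in a few lines of Python. This is a toy, assuming an in-memory `dict` as the vault and a `tok_` prefix for tokens (both illustrative choices, not any product's format); the point is that each token is generated randomly, so it cannot be reversed without access to the vault's mapping.

```python
import secrets


class TokenVault:
    """Toy token vault that maps random tokens back to the original values.

    A real vault persists this mapping in hardened, access-controlled storage;
    an in-memory dict is used here only to illustrate the idea.
    """

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # The token is random, so it reveals nothing about the value it replaces.
        token = "tok_" + secrets.token_hex(16)
        while token in self._token_to_value:  # guard against an (unlikely) collision
            token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault can map a token back; unknown tokens raise KeyError.
        return self._token_to_value[token]


if __name__ == "__main__":
    vault = TokenVault()
    token = vault.tokenize("4111111111111111")  # a well-known test card number
    print("stored/transmitted:", token)
    print("recovered by the vault:", vault.detokenize(token))
```

Unlike encryption, there is no key that turns a token back into data: an attacker who steals tokens alone learns nothing, which is why only the vault itself needs the strongest protection.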

The best-known real-world example is the protection of payment credentials. In the payment world, the customer’s 16-digit Primary Account Number (PAN) is replaced with a custom, randomly generated alphanumeric value, and the real number is stored in a secure virtual vault, so that the token rather than the card number is what gets transmitted online.
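Many systems keep the token in the same shape as the card number so that existing database columns and validation rules still accept it. The sketch below assumes a digits-only token (rather than the alphanumeric form mentioned above) that keeps the last four digits for display; `pan_token` and the 12 to 19 digit length check are illustrative assumptions. The mapping from token back to the real PAN would still live in the vault.

```python
import secrets


def pan_token(pan: str, keep_last: int = 4) -> str:
    """Replace a card number with random digits of the same length.

    The last `keep_last` digits are kept so an application can still display
    "card ending in 1111"; all other digits are random and say nothing about
    the original number.
    """
    digits = pan.replace(" ", "").replace("-", "")
    if not (digits.isascii() and digits.isdigit()) or not 12 <= len(digits) <= 19:
        raise ValueError("expected a card number of 12 to 19 digits")
    if not 0 <= keep_last <= len(digits):
        raise ValueError("keep_last out of range")
    split = len(digits) - keep_last
    random_part = "".join(secrets.choice("0123456789") for _ in range(split))
    # Slicing from `split` (not `-keep_last`) keeps keep_last=0 from copying the whole PAN.
    return random_part + digits[split:]


if __name__ == "__main__":
    print(pan_token("4111 1111 1111 1111"))
```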

To retrieve the real data, for example when processing a recurring credit card payment, the token is securely submitted to the vault, which looks up the real value it maps to. For the credit card user this is a seamless experience: they are not aware that their data is stored in a different form in the cloud.

Role of Data Tokenization in securing the application development lifecycle

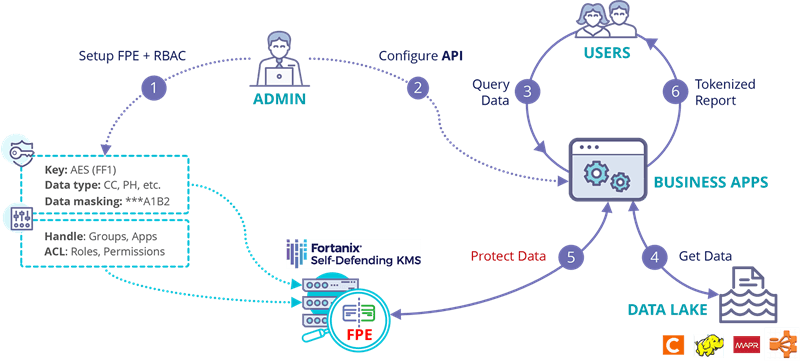

The lifecycle diagram above shows how Data Tokenization works, in this case within a Hadoop Data Lake, and how the combination of Format Preserving Encryption (FPE) and role-based access control (RBAC) for applications, provided by Fortanix DSM, helps protect application data.
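To show what "format preserving" means, here is a toy format-preserving cipher for even-length digit strings: a balanced Feistel network whose round function is HMAC-SHA256 reduced modulo 10^(n/2). It is an illustration only, built from an assumed hand-rolled construction; it is not NIST FF1/FF3-1 or any vendor's implementation, and it should not protect real data. What it demonstrates is that a 16-digit number encrypts to another 16-digit number and decrypts back with the same key.

```python
import hashlib
import hmac

ROUNDS = 10  # illustrative choice for this toy cipher


def _split(digits: str) -> tuple[str, str]:
    if len(digits) % 2 or not (digits.isascii() and digits.isdigit()):
        raise ValueError("this toy cipher needs a non-empty, even-length string of digits")
    half = len(digits) // 2
    return digits[:half], digits[half:]


def _round_value(key: bytes, round_no: int, half: str) -> int:
    # Pseudorandom round function: HMAC-SHA256 over (round, half), reduced mod 10^len(half).
    mac = hmac.new(key, f"{round_no}:{half}".encode(), hashlib.sha256).digest()
    return int.from_bytes(mac, "big") % 10 ** len(half)


def fpe_encrypt(key: bytes, digits: str) -> str:
    left, right = _split(digits)
    width = len(left)
    for r in range(ROUNDS):
        # Feistel round: (L, R) -> (R, (L + F(R)) mod 10^width); output stays all digits.
        mixed = (int(left) + _round_value(key, r, right)) % 10 ** width
        left, right = right, str(mixed).zfill(width)
    return left + right


def fpe_decrypt(key: bytes, digits: str) -> str:
    left, right = _split(digits)
    width = len(left)
    for r in reversed(range(ROUNDS)):
        # Undo one round: the previous right half is the current left half.
        previous_left = (int(right) - _round_value(key, r, left)) % 10 ** width
        left, right = str(previous_left).zfill(width), left
    return left + right


if __name__ == "__main__":
    key = b"demo-key-not-for-production"
    pan = "4111111111111111"
    ciphertext = fpe_encrypt(key, pan)
    print(ciphertext, len(ciphertext))  # still 16 digits
    print(fpe_decrypt(key, ciphertext) == pan)
```

Because the output keeps the input's length and alphabet, legacy schemas and applications can store the protected value without changes, which is the practical appeal of FPE.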

With Fortanix, authorized users can also authenticate, have their access governed by RBAC, query the data, and tokenize it on the fly.
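The combination of RBAC and on-the-fly tokenization can be sketched as a vault that checks the caller's role before every operation. The role names and permission table below are hypothetical, and this is not the Fortanix DSM API; in a real system the role would come from the authenticated identity rather than a function argument. The sketch only shows the pattern of letting most callers tokenize while reserving detokenization for a narrowly scoped role.

```python
import secrets

# Hypothetical role-to-permission table; a real deployment would take this from
# the key-management system's access policies rather than a hardcoded dict.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "analyst": {"tokenize"},
    "payments_service": {"tokenize", "detokenize"},
}


class AccessDenied(PermissionError):
    """Raised when a role is not allowed to perform the requested operation."""


class GuardedVault:
    """Toy vault that checks the caller's role before every operation."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}

    def _authorize(self, role: str, action: str) -> None:
        # Unknown roles get an empty permission set, so they are denied by default.
        if action not in ROLE_PERMISSIONS.get(role, set()):
            raise AccessDenied(f"role {role!r} may not {action}")

    def tokenize(self, role: str, value: str) -> str:
        self._authorize(role, "tokenize")
        token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        return token

    def detokenize(self, role: str, token: str) -> str:
        self._authorize(role, "detokenize")
        return self._token_to_value[token]


if __name__ == "__main__":
    vault = GuardedVault()
    token = vault.tokenize("analyst", "123-45-6789")
    print(vault.detokenize("payments_service", token))
    try:
        vault.detokenize("analyst", token)
    except AccessDenied as err:
        print("blocked:", err)
```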

In this short video, Manas Agarwal, Technical Director at Fortanix, uses a demo to explain how Data Tokenization can play a critical role in the application development lifecycle.

Some use cases and benefits

| Industry | Use Case | Benefits |
|---|---|---|
| Payment Card Industry | Protecting payment card data | Comply with standards such as PCI DSS |
| Banking | Protecting Primary Account Numbers | Secure banking transactions and comply with standards such as PCI DSS |
| Healthcare | Protecting SSNs and patient records | Comply with HIPAA regulations by substituting electronic protected health information (ePHI) and non-public personal information (NPPI) with tokenized values |
| Ecommerce websites | Fully tokenizing card and consumer information on file | Increased customer trust, as tokenization adds security and confidentiality for consumer information |

Data Tokenization has long been treated as the poor relation of data encryption. But with the proliferation of privacy regulations and compliance requirements, its importance in securing data within payment cards, mobile wallets, and SaaS applications has been increasing. Data Tokenization can also be combined with data encryption to provide an additional layer of security that helps protect application environments against insiders with access to decrypted data.

Learn more about the Data Tokenization feature with Fortanix:

- The Fortanix DSM tokenization feature eliminates the link to sensitive data and is used in credit card processing to reduce or eliminate breaches.

- Learn how to secure legacy applications using format-preserving Data Tokenization.

Learn how Data Tokenization can anonymize sensitive assets, cut compliance costs, and support regulations like PCI DSS, HIPAA, and GDPR. Download this whitepaper.