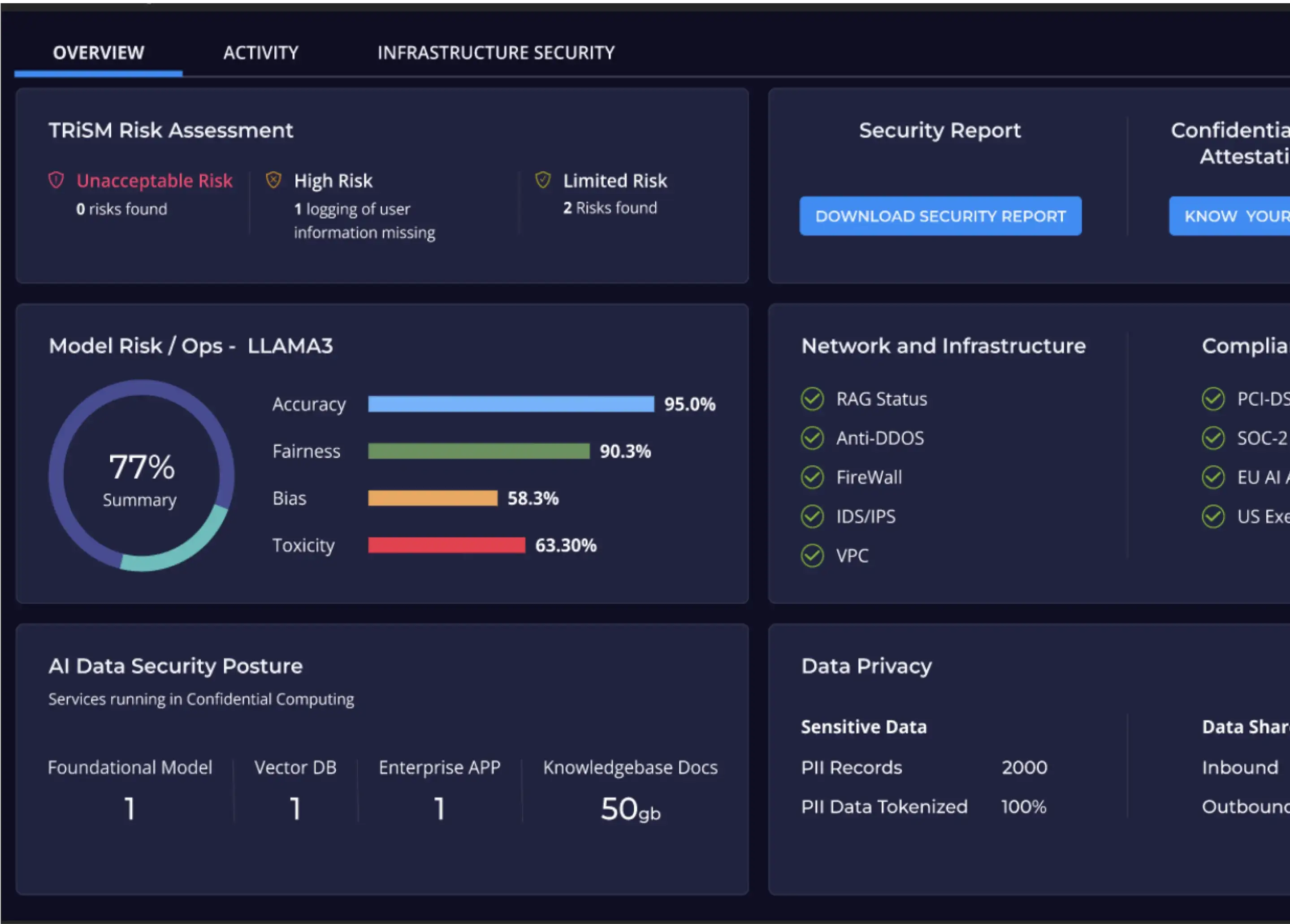

Keep sensitive data anonymous, portable, and compliant

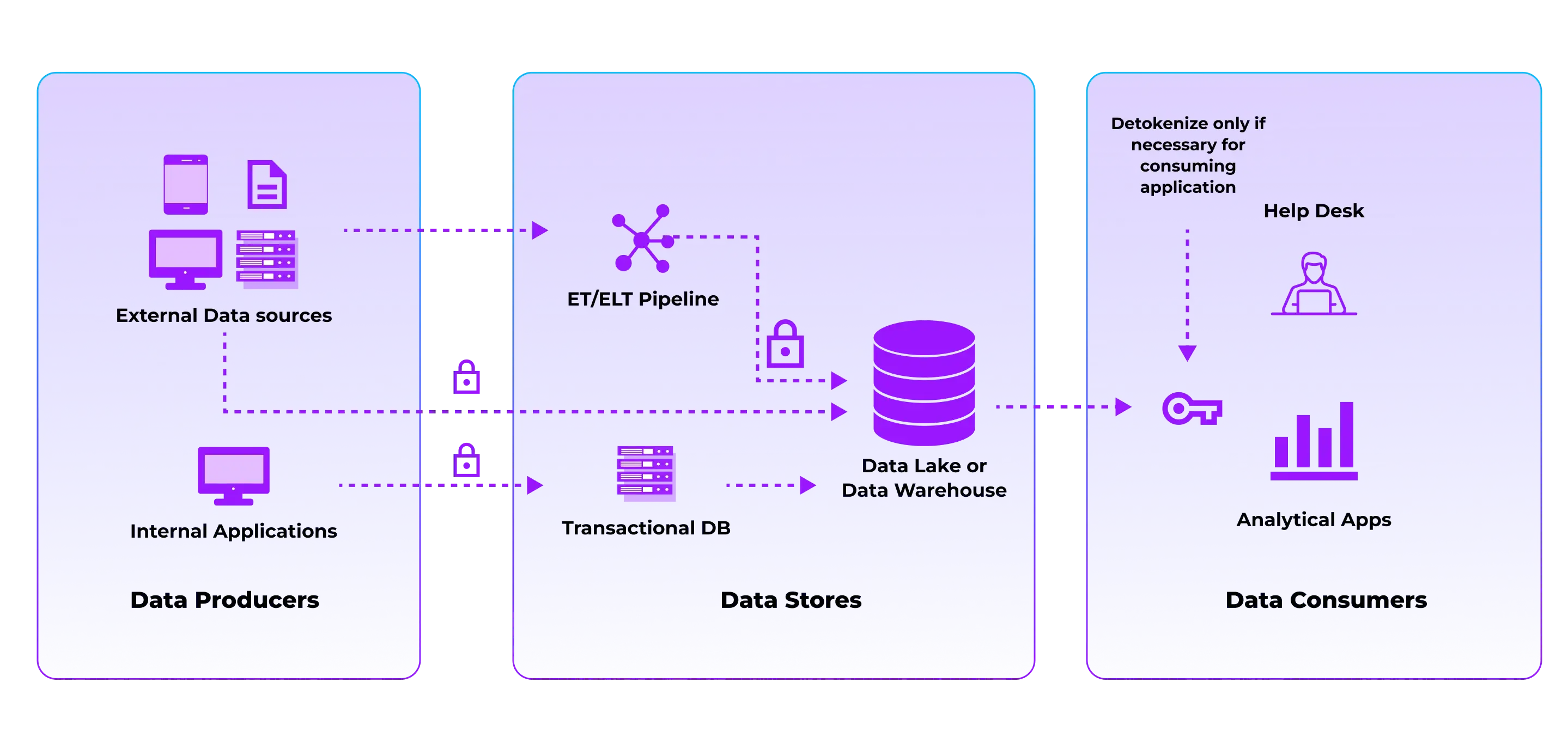

Data masking and tokenization are critical technologies that enable organizations to reduce the impact of a cyber-attack, comply with data privacy laws, and harness the value of their data. Yet many Data, Security, and Application Teams still grapple with data security and privacy: sensitive data is everywhere, legacy solutions cannot scale to meet the performance and agility demands of modern distributed workloads, and adoption of siloed point solutions often violates corporate security mandates or data privacy regulations.

Solution

Fortanix Data Security Manager, a unified data security platform powered by Confidential Computing, allows teams to keep sensitive PII and PHI data anonymous, portable, and compliant. Fortanix delivers secure and scalable data tokenization by means of vaultless, NIST-certified FF1 Format Preserving Encryption (FPE). Sensitive values are replaced with surrogate values called tokens, which retain the same format as the original data but have no intrinsic value. This approach preserves the integrity and structure of the data while de-identifying it and safeguarding it from unauthorized access.

With Fortanix Tokenization, teams can now safely share and use data while reducing cyber risk and complying with privacy and security regulations.
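To make the idea of a vaultless, format-preserving token concrete, here is a minimal Python sketch. It is a toy Feistel construction built on HMAC-SHA256, not FF1 and not the Fortanix implementation; the names `tokenize`, `detokenize`, and `ROUNDS` are hypothetical and chosen only for illustration. It shows the two properties described above: the token has the same length and character set as the input, and it can be reversed with the key alone, without a lookup table. It should not be used to protect real data.

```python
import hashlib
import hmac

ROUNDS = 10  # an even count, so both halves end up back in their original positions


def _prf(key: bytes, round_no: int, half: str) -> int:
    """Keyed pseudorandom function: HMAC-SHA256 over the round number and one half."""
    digest = hmac.new(key, bytes([round_no]) + half.encode(), hashlib.sha256).digest()
    return int.from_bytes(digest, "big")


def _check(digits: str) -> None:
    if len(digits) < 2 or not (digits.isascii() and digits.isdigit()):
        raise ValueError("expected a string of at least two ASCII digits")


def tokenize(key: bytes, digits: str) -> str:
    """Map a digit string to a digit string of the same length (toy scheme, not FF1)."""
    _check(digits)
    u = len(digits) // 2
    a, b = digits[:u], digits[u:]
    for i in range(ROUNDS):
        m = len(a)
        # Add a keyed value derived from the other half, modulo 10^m.
        c = (int(a) + _prf(key, i, b)) % 10**m
        a, b = b, str(c).zfill(m)
    return a + b


def detokenize(key: bytes, token: str) -> str:
    """Invert tokenize() using only the key; no token vault is consulted."""
    _check(token)
    u = len(token) // 2
    a, b = token[:u], token[u:]
    for i in reversed(range(ROUNDS)):
        m = len(b)
        # Undo one round: subtract the same keyed value that was added.
        prev_a = (int(b) - _prf(key, i, a)) % 10**m
        a, b = str(prev_a).zfill(m), a
    return a + b


if __name__ == "__main__":
    demo_key = b"demo-key-not-for-production"
    token = tokenize(demo_key, "4111111111111111")
    print(token, detokenize(demo_key, token))
```

Because the mapping is derived from the key, the same input always yields the same token, which is what allows tokenized columns to be joined or moved between data stores without sharing a token database.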

Benefits

Mitigate AI Risk

Manage keys, tokens, and policies across all infrastructure in a single UI.

Enforce governance and compliance

Implement administrative safeguards and quickly create tokenization rules to accelerate compliance.

Increase agility

Automate processes with SDKs and REST APIs and seamlessly integrate with CI/CD and SIEM tools.

Key Features

Unify and simplify management with a single system of record for keys, tokens, and policies

Seamlessly move de-identified data across data stores, workflows, and pipelines with vaultless, NIST-certified FF1 Format Preserving Encryption for high performance and scalability

Choose from SaaS or On-premises deployment options, featuring integrated KMS and HSM, with keys stored in FIPS 140-2 Level 3 HSMs

Mitigate risk with Zero Trust principles by applying granular role-based access control, quorum approval, and key custodian policies

Speed creation of rules for various mandates, laws, and regulations using pre-built and customizable tokenization policies

Accelerate Governance, Risk and Compliance processes by enforcing uniform policy across all environments

Aid audits and forensic analysis with immutable audit logs that provide snapshots of all crypto operations

Leverage REST APIs for all crypto and management operations, and SDKs that integrate with CI/CD, SIEM, and other tools, to support low-code development and automate workflows

The DSM Accelerator option lets you cache keys and tokenize locally at the application with a specified TTL (via JCE, PKCS#11, or REST) to meet stringent low-latency requirements
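The local key caching with a TTL mentioned in the last feature can be pictured with a short Python sketch. This is an illustration of the general pattern only, not the DSM Accelerator's code or API: the class `TTLKeyCache`, the `fetch_key` callback (standing in for a remote key retrieval), and the injectable `clock` are all hypothetical names.

```python
import time
from typing import Callable, Dict, Tuple


class TTLKeyCache:
    """Keep fetched keys in memory and re-fetch them once their TTL has elapsed."""

    def __init__(
        self,
        fetch_key: Callable[[str], bytes],  # hypothetical remote lookup
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch_key = fetch_key
        self._ttl = ttl_seconds
        self._clock = clock
        # key_id -> (key material, expiry time on the chosen clock)
        self._entries: Dict[str, Tuple[bytes, float]] = {}

    def get(self, key_id: str) -> bytes:
        now = self._clock()
        entry = self._entries.get(key_id)
        if entry is not None and now < entry[1]:
            return entry[0]  # still fresh: no network round trip
        key = self._fetch_key(key_id)  # missing or expired: fetch again
        self._entries[key_id] = (key, now + self._ttl)
        return key


if __name__ == "__main__":
    fetches = []

    def fake_fetch(key_id: str) -> bytes:
        fetches.append(key_id)
        return b"key-for-" + key_id.encode()

    cache = TTLKeyCache(fake_fetch, ttl_seconds=30)
    cache.get("pan-key")
    cache.get("pan-key")
    print(len(fetches))  # one remote fetch serves both calls
```

A shorter TTL narrows the window in which a revoked or rotated key can still be used locally, at the cost of more frequent fetches; a longer TTL trades the other way.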

Outcomes

Reduce the cost and effort for PCI-DSS compliance

Easily replace the 16-digit primary credit card account number with a vaultless token and store it securely to enable online transmission of this data.

PII Compliance (GDPR, CCPA)

Tokenize Personally Identifiable Information (PII) data to achieve compliance with a variety of privacy regulations, meet data residency requirements, and increase customers’ trust.

Accelerate HIPAA compliance

Comply with HIPAA regulations by substituting electronic protected health information (ePHI) and non-public personal information (NPPI) with a tokenized value.

Securely migrate to cloud

Fortanix features a natively integrated, FIPS 140-2 Level 3 compliant HSM, available on-premises or as SaaS, that enables separate storage of tokens and encryption keys, giving organizations control over governance and access.