Run agentic AI with turnkey orchestration, continuous CPU+GPU composite attestation, attested workload identity, and HSM-gated secure key access: evidence your approval gates can trust.

The Problem

Enterprises are pushing agentic AI toward production, but readiness reviews stall, especially in on-prem, air-gapped, and sovereign environments, because teams lack continuous, machine-verifiable proof that the exact runtime handling sensitive data can be trusted. Real-world breach data shows that both threat classes matter, external actors and internal ones alike, and the average breach cost reached US$4.88M in 2024, with regulated sectors often higher.

At the approval gate, what’s missing is evidence of data-in-use protection: proof that the workload runs inside an attested, hardware-based secure enclave (confidential computing), not just encryption at rest or in transit.

The cryptographic control must be anchored in a FIPS 140-2 Level 3-validated HSM, so that key release governing data at rest, in transit, and in use is provably enforced.

Compounding this, the market lacks a turnkey platform for agentic AI with built-in trust, security, and sovereignty; analysts note that today’s path from AI to agents is fragmented and that toolchains are still immature, which keeps “agents” rare in production.

Why Confidential Computing matters for on-premises and air-gapped environments

An air gap stops internet-borne threats; it does not stop local risk: root admins, physical access, or supply chain drift. Confidential computing adds hardware-isolated secure enclaves that keep data, prompts, model weights, and credentials encrypted in use and provide verifiable proof of the runtime. Measured boot and offline attestation mean nothing is decrypted until the stack is proven. Attestation-gated key release and sealed storage bind secrets to a specific platform and measurement, so disk clones or firmware rollbacks fail. Composite CPU+GPU attestation verifies hosts and accelerators before loading crown-jewel IP; credentials stay inside the enclave as short-lived, scoped tokens.
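To make the sealing idea concrete, here is a minimal sketch of how a key can be bound to both a platform and a measurement. It is an illustration only: real TEEs derive sealing keys in hardware from a fused secret, and the function name, label, and sample values below are assumptions, not part of any Fortanix or NVIDIA API.

```python
import hashlib
import hmac


def derive_sealing_key(platform_secret: bytes, measurement: bytes) -> bytes:
    """Derive a 32-byte key bound to one platform secret and one measured stack."""
    # HMAC-SHA256 keyed by the platform secret; the measurement is part of the message,
    # so changing either input yields an unrelated key.
    return hmac.new(platform_secret, b"sealing-v1|" + measurement, hashlib.sha256).digest()


# Hypothetical values, for illustration only.
platform_a = b"platform-A-fused-secret"
platform_b = b"platform-B-fused-secret"
approved = hashlib.sha384(b"firmware-1.2 + image-7").digest()
rolled_back = hashlib.sha384(b"firmware-1.1 + image-7").digest()

key = derive_sealing_key(platform_a, approved)
same_stack_again = derive_sealing_key(platform_a, approved)
cloned_disk_key = derive_sealing_key(platform_b, approved)      # disk moved to another host
rollback_key = derive_sealing_key(platform_a, rolled_back)      # older firmware booted
```

Because the cloned disk and the rolled-back firmware each derive a different key, data sealed under the approved stack cannot be unsealed in either case.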

Our solution

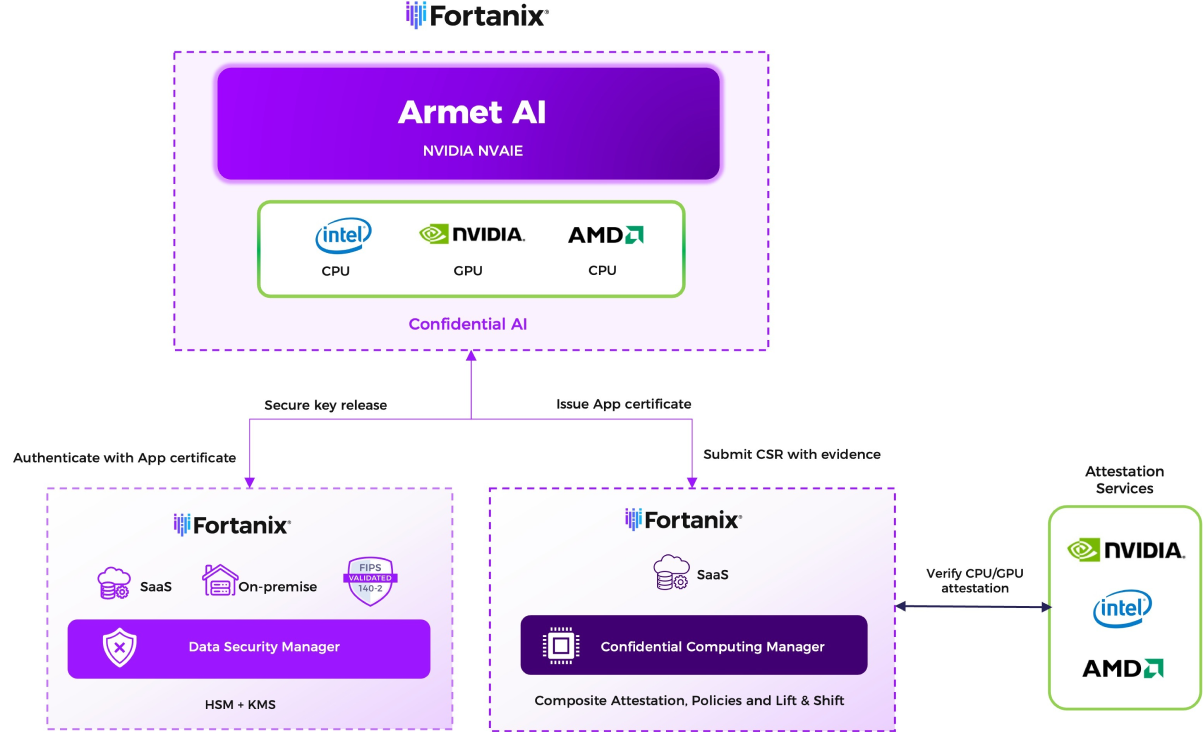

Fortanix delivers Armet AI, an evidence-first platform for agentic AI that runs where the data lives: on-prem, air-gapped, sovereign. Armet AI combines NVIDIA Confidential Computing with turnkey orchestration, so every workload proves its state before anything sensitive moves. Runtime trust is established across CPU and GPU, bound to a cryptographic identity, and enforced by policy, so keys, datasets, and model artifacts are accessed only by trusted services. The same chain of evidence feeds unified audit and approval gates. The result is a practical path from pilot to production, with verifiable trust, security, and sovereignty on the NVIDIA AI stack your teams already operate.

Components

NVIDIA Confidential infrastructure: NVIDIA Hopper and NVIDIA Blackwell GPUs with NVIDIA Confidential Computing capabilities enable agentic services to execute inside hardware-enforced Trusted Execution Environments (TEEs). Firmware and images are attested at launch and continuously evidenced. Even in restricted networks, the environment preserves proof of attestation and accepts guarded trust updates and revocations. The result is the same operating model on-prem, with reliable time sync and identity even in restricted networks.

Turnkey orchestration: A production layer for agentic workflows that runs where the data lives: on-prem, air-gapped, sovereign. Teams work in projects with clear roles and runbooks; services are brought up the same way every time; connectors, guardrails, approvals, and observability map to your operating model. Role-based access and change control are built in, and everything is API-first, so automation fits naturally. The result is repeatable patterns that move pilots to production with confidence, not custom glue.

Fortanix Confidential Computing Manager (CCM): The trust and policy plane of the stack, the place where hardware evidence becomes enforceable runtime decisions. It unifies CPU attestation (Intel TDX, AMD SEV-SNP) with GPU attestation (NVIDIA NRAS), runs continuous posture checks, and issues short-lived Remote Attestation TLS (RA-TLS) workload identities, so identities reflect proven runtime state. It works the same way in constrained networks, using local verification and pre-seeded trust bundles for air-gapped sites. Evidence is normalized to standards, and logs are signed and sent to your SIEM for a tamper-evident trail, giving security and operations teams an API-first, audit-ready control plane.
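The composite check described above can be sketched as a small appraisal function: an identity is issued only when every required component (CPU and GPU) presents validly signed evidence whose measurement is on the approved list. This is a simplified illustration, not the CCM API; the `Evidence` and `WorkloadIdentity` types, the 10-minute lifetime, and the sample measurements are all assumptions, and signature verification is reduced to a boolean.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class Evidence:
    component: str         # "cpu" or "gpu"
    measurement: str       # digest reported for that component
    signature_valid: bool  # outcome of verifying the vendor signature chain


@dataclass(frozen=True)
class WorkloadIdentity:
    workload: str
    not_after: datetime    # short expiry, so identity tracks current runtime state


def appraise(evidence: list, references: dict) -> bool:
    """True only if every required component has valid, approved evidence."""
    by_component = {e.component: e for e in evidence}
    for component, approved in references.items():
        e = by_component.get(component)
        if e is None or not e.signature_valid or e.measurement not in approved:
            return False  # fail closed on missing, unsigned, or unknown evidence
    return True


def issue_identity(workload: str, evidence: list, references: dict,
                   now: datetime, ttl: timedelta = timedelta(minutes=10)
                   ) -> Optional[WorkloadIdentity]:
    if not appraise(evidence, references):
        return None
    return WorkloadIdentity(workload, now + ttl)


# Hypothetical reference values, as a pre-seeded trust bundle might hold them.
references = {"cpu": {"cpu-meas-01"}, "gpu": {"gpu-meas-07"}}
now = datetime(2025, 1, 1, tzinfo=timezone.utc)
good = [Evidence("cpu", "cpu-meas-01", True), Evidence("gpu", "gpu-meas-07", True)]
cpu_only = [Evidence("cpu", "cpu-meas-01", True)]

identity = issue_identity("agent-service", good, references, now)
rejected = issue_identity("agent-service", cpu_only, references, now)
```

Here `identity` carries an expiry ten minutes after `now`, while `rejected` is `None` because GPU evidence is missing. In an air-gapped site the `references` mapping would come from the local trust bundle rather than an online service.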

Fortanix Data Security Manager (DSM HSM + KMS): The system of record for keys and cryptographic policy, anchored in a FIPS 140-2 Level 3 validated HSM. It keeps custody of encryption keys, tokens, and secrets and releases them only when a requesting service presents an approved, attested workload identity and policy allows it, so access to data at rest, in motion, and in use is provably governed. DSM brings crypto agility (algorithms and rotations) and dual control/quorum for high-risk actions. Every operation emits signed, tamper-evident logs to your SIEM, supporting clean separation of duties and audit. Built for regulated operations, DSM offers high availability and disaster recovery options and maintains confidence that keys never leave controlled custody.
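As a rough illustration of attestation-gated release with quorum, the sketch below evaluates a request against a per-key policy and denies by default. It does not reflect the DSM API; `KeyPolicy`, `Request`, `may_release`, and the sample names are hypothetical, and the attested identity is reduced to an expiry timestamp.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class KeyPolicy:
    allowed_workloads: frozenset
    quorum: int = 0  # distinct approvers required; 0 for routine operations


@dataclass(frozen=True)
class Request:
    workload: str
    identity_expires: Optional[datetime]  # None means no attested identity was presented
    approvers: frozenset = frozenset()


def may_release(policy: KeyPolicy, request: Request, now: datetime) -> bool:
    """Fail closed: every condition must hold, otherwise the key stays in custody."""
    if request.identity_expires is None or request.identity_expires <= now:
        return False  # no proof, or proof has expired
    if request.workload not in policy.allowed_workloads:
        return False  # attested, but not authorized for this key
    return len(request.approvers) >= policy.quorum


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
valid_until = now + timedelta(minutes=5)

routine = KeyPolicy(frozenset({"agent-service"}))
high_risk = KeyPolicy(frozenset({"agent-service"}), quorum=2)

routine_ok = may_release(routine, Request("agent-service", valid_until), now)
no_identity = may_release(routine, Request("agent-service", None), now)
one_approver = may_release(
    high_risk, Request("agent-service", valid_until, frozenset({"alice"})), now)
two_approvers = may_release(
    high_risk, Request("agent-service", valid_until, frozenset({"alice", "bob"})), now)
```

Using a set for approvers means the same person approving twice still counts once, which is the point of dual control.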

How it works

1. Start where the data lives: Stand up the environment in your own data center or AI factory, on-prem or air-gapped, using the same operational patterns your teams already trust. Turnkey orchestration brings up the confidential-computing-ready serving stack on NVIDIA GPUs, so agent services from NVIDIA AI Enterprise run without duct tape or one-off scripts.

2. Prove the platform before it touches anything sensitive: Composite attestation checks the enclave on the CPU and the confidential state of the GPU against approved references. In air-gapped sites, a local verifier uses pre-seeded trust bundles, so proof does not depend on the internet.

3. Bind that proof to a real workload identity: The workload receives a short-lived RA-TLS certificate. Think of it as a cryptographic passport that says this exact workload, in this enclave, is approved right now. Identity is no longer a hostname or a role; it is tied to proven runtime state.

4. Permit access by policy, not assumption: Keys, secrets, datasets, and model files are released only when the requester presents a valid attested identity and the policy says yes. The HSM-gated model means the decision fails closed (no proof, no access), so data at rest, in motion, and in use remains under measurable control.

5. Do the work inside the enclave: Agents decrypt and process data and model artifacts only inside the secure enclave. Guardrails and role-based controls travel with the workload.

6. Leave an evidence trail approvers can trust: Every check and decision is logged in one place - who, what, where, when, and why - for production readiness reviews, audits, and incident response.
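One common way to make such an evidence trail tamper-evident is a hash chain, where each record commits to the one before it. The sketch below assumes that technique for illustration; the source does not say how the platform's logs are constructed, and the record fields and function names are hypothetical. A production system would also sign records, since a hash chain alone does not stop someone from rewriting the whole log.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev: str, event: dict) -> str:
    # Canonical JSON (sorted keys) so the same event always hashes the same way.
    body = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev + body).encode("utf-8")).hexdigest()


def append_record(log: list, event: dict) -> dict:
    """Append an event, chaining it to the hash of the previous record."""
    prev = log[-1]["hash"] if log else GENESIS
    record = {"event": event, "prev": prev, "hash": _digest(prev, event)}
    log.append(record)
    return record


def verify_chain(log: list) -> bool:
    """Recompute every hash; any edited, removed, or reordered record breaks the chain."""
    prev = GENESIS
    for record in log:
        if record["prev"] != prev or record["hash"] != _digest(prev, record["event"]):
            return False
        prev = record["hash"]
    return True


log = []
append_record(log, {"who": "agent-service", "what": "attestation", "decision": "pass"})
append_record(log, {"who": "agent-service", "what": "key-release", "decision": "deny"})
intact = verify_chain(log)

# Simulate an after-the-fact edit to the second decision.
log[1]["event"]["decision"] = "allow"
after_tamper = verify_chain(log)
```

The first verification succeeds and the second fails, which is the property an approver or auditor relies on when reviewing who did what, where, when, and why.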