In my last blog post, I described how Fortanix helps customers migrate their workloads across environments of all shapes and sizes, whether from on-premises to the cloud or between cloud environments.

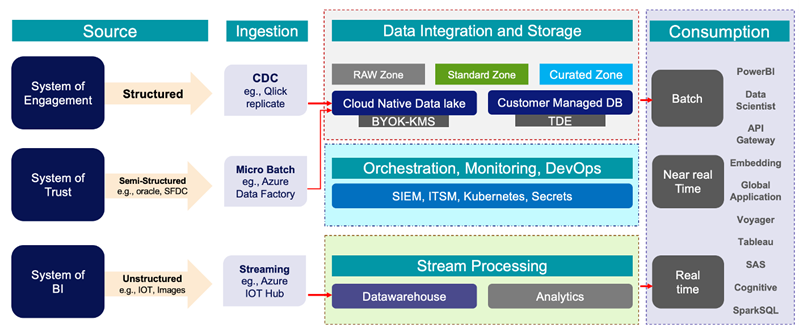

The figure above shows how, during a migration, data from structured, semi-structured, and unstructured sources is ingested through an intermediate ingestion layer into either cloud-native data lakes (Azure Synapse, BigQuery, Amazon Redshift, or AWS Glue) or customer-managed databases such as Oracle or Microsoft SQL Server.

Why Database Protection Is Essential in Modern Data Lakes

Data lakes and big data are an emerging set of technologies that give organizations greater insight into their ever-growing data, helping them make better business decisions and improve customer satisfaction.

However, aggregating data in these lakes also makes them an attractive target for hackers. Organizations must be able to handle this data efficiently while protecting database assets and sensitive customer information, in order to comply with local and international privacy laws and regulatory requirements.

Securing big data is difficult for several reasons:

- Data arrives in multiple real-time feeds from different sources, each with different protection needs.

- Multiple types of data are combined.

- The data is accessed by many different users with varied analytical requirements.

There are several ways to achieve database security in a data lake environment:

- Database encryption: Several techniques are available for database encryption, including Transparent Data Encryption (TDE) and column-level encryption. TDE encrypts an entire database, while column-level encryption encrypts individual columns in a database.

- Application-level encryption: The application itself encrypts data through APIs before the data reaches the database.

- Tokenization: Often implemented with Format Preserving Encryption (FPE), tokenization protects data without changing its original format, so applications and databases can continue to use it. Protection is applied at the field level, which makes it possible to protect the sensitive parts of the data while leaving the non-sensitive parts available to applications.
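Production tokenization services typically rely on standardized FPE modes such as FF1 from NIST SP 800-38G. To make "format preserving" and "field level" concrete, here is a deliberately simplified sketch, assuming a secret key is already available: a toy Feistel network over decimal digits that uses HMAC-SHA256 as its round function. It is not FF1, has no tweak, is weak on very short inputs, and is not how Fortanix DSM implements tokenization; `fpe_encrypt`, `fpe_decrypt`, and `tokenize_pan` are hypothetical names.

```python
import hashlib
import hmac

ROUNDS = 10  # even, so the two halves end up back in their original positions


def _round_value(key: bytes, round_index: int, half: str, total_len: int, modulus: int) -> int:
    """Toy round function F: HMAC-SHA256 over the round index and one half, reduced mod 10**m."""
    msg = f"{total_len}|{round_index}|{half}".encode()
    digest = hmac.new(key, msg, hashlib.sha256).digest()
    return int.from_bytes(digest, "big") % modulus


def _split(digits: str) -> tuple[str, str]:
    """Split a decimal string into a left half of n//2 digits and a right half of the rest."""
    if len(digits) < 2 or not (digits.isascii() and digits.isdigit()):
        raise ValueError("expected at least two ASCII decimal digits")
    u = len(digits) // 2
    return digits[:u], digits[u:]


def fpe_encrypt(key: bytes, digits: str) -> str:
    """Map a decimal string to another decimal string of the same length (toy Feistel FPE)."""
    a, b = _split(digits)
    n, u, v = len(digits), len(a), len(b)
    for i in range(ROUNDS):
        m = u if i % 2 == 0 else v  # length of the half being updated this round
        c = (int(a) + _round_value(key, i, b, n, 10 ** m)) % 10 ** m
        a, b = b, str(c).zfill(m)  # zfill keeps leading zeros, so the length is preserved
    return a + b


def fpe_decrypt(key: bytes, digits: str) -> str:
    """Invert fpe_encrypt by running the rounds backwards with modular subtraction."""
    a, b = _split(digits)
    n, u, v = len(digits), len(a), len(b)
    for i in reversed(range(ROUNDS)):
        m = u if i % 2 == 0 else v
        c, b = b, a
        a = str((int(c) - _round_value(key, i, b, n, 10 ** m)) % 10 ** m).zfill(m)
    return a + b


def tokenize_pan(key: bytes, pan: str) -> str:
    """Field-level protection: encrypt all but the last four digits, which stay readable."""
    return fpe_encrypt(key, pan[:-4]) + pan[-4:]
```

Because `tokenize_pan` leaves the last four digits in clear, downstream applications can still display or match on them while the rest of the number stays protected and keeps its 16-digit shape.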

As large volumes of data from an array of sources such as machine sensors, server logs, and applications flow into the data lake, it serves as a central repository for a broad and diverse dataset.

Because the data lake houses vital and often highly sensitive business data, it needs comprehensive database protection. Data can be protected at multiple stages: before it enters the data lake, as it enters, or after it has landed there.

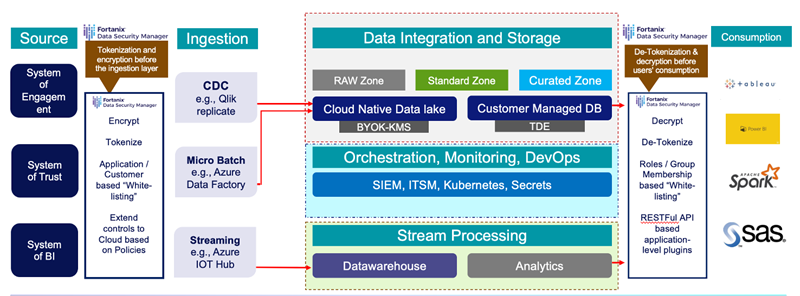

As the image above shows, Fortanix provides data-driven security for data that is stored or processed in data lakes.

Best Practices for Database Security with Fortanix DSM

Fortanix DSM enables the following use cases:

- Encryption or tokenization at the source application: In this scenario, data is encrypted before it is imported into the cloud-native data lake, so it stays protected throughout its lifecycle, including when it lands in the lake. This option requires an interface to the source applications for encryption and tokenization, which can be provided through API-level integration with Fortanix. The protected data is then imported into the data lake.

- Encryption within the data lake: This option protects data fields once they are identified in the data lake. It relies on integrations with cloud KMS offerings such as Azure Key Vault, AWS KMS, and Google Cloud EKM. By default, the cloud KMS provider protects data using symmetric or asymmetric keys that it manages. With Fortanix BYOK, DSM integrates with Azure Key Vault and AWS KMS to protect data using master keys that are generated in DSM and stored in FIPS 140-2 Level 3 certified HSMs, outside the cloud service provider's (CSP's) control.

- Storage-level encryption: Storage-level encryption protects data in case of physical theft or accidental loss of a disk volume. This option uses Transparent Data Encryption (TDE) within the data lake to create a secure database environment. For better security, Fortanix DSM manages the TDE keys in Hardware Security Modules (HSMs).
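The source-side and BYOK options share one idea, usually called envelope encryption: data is encrypted with a data-encryption key (DEK), and the DEK is in turn wrapped by a master key that never leaves the external key manager. The sketch below illustrates that pattern under stated assumptions. `ExternalKeyManager`, `encrypt_record`, and `decrypt_record` are hypothetical names, not Fortanix DSM or Azure APIs, and the `seal`/`open_sealed` helpers are a toy stand-in (an HMAC-based counter-mode keystream plus encrypt-then-MAC) used only so the example runs on the Python standard library. A real integration would use a vetted AEAD such as AES-GCM and the key manager's own wrap and unwrap operations.

```python
import hashlib
import hmac
import secrets

NONCE_LEN, TAG_LEN = 16, 32


def _subkeys(key: bytes) -> tuple[bytes, bytes]:
    """Derive separate encryption and MAC keys from one key (toy construction)."""
    return (hmac.new(key, b"enc", hashlib.sha256).digest(),
            hmac.new(key, b"mac", hashlib.sha256).digest())


def _keystream(enc_key: bytes, nonce: bytes, length: int) -> bytes:
    """HMAC-SHA256(enc_key, nonce || counter) blocks, concatenated and truncated."""
    out, counter = bytearray(), 0
    while len(out) < length:
        out += hmac.new(enc_key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


def seal(key: bytes, plaintext: bytes) -> bytes:
    """Toy authenticated encryption: returns nonce || ciphertext || tag (illustration only)."""
    enc_key, mac_key = _subkeys(key)
    nonce = secrets.token_bytes(NONCE_LEN)
    ct = bytes(p ^ k for p, k in zip(plaintext, _keystream(enc_key, nonce, len(plaintext))))
    return nonce + ct + hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()


def open_sealed(key: bytes, blob: bytes) -> bytes:
    """Verify the tag, then decrypt; raises ValueError on a wrong key or tampering."""
    if len(blob) < NONCE_LEN + TAG_LEN:
        raise ValueError("ciphertext too short")
    enc_key, mac_key = _subkeys(key)
    nonce, ct, tag = blob[:NONCE_LEN], blob[NONCE_LEN:-TAG_LEN], blob[-TAG_LEN:]
    if not hmac.compare_digest(tag, hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()):
        raise ValueError("authentication failed")
    return bytes(c ^ k for c, k in zip(ct, _keystream(enc_key, nonce, len(ct))))


class ExternalKeyManager:
    """Hypothetical stand-in for an external KMS/HSM that holds the master key (KEK)."""

    def __init__(self) -> None:
        self._kek = secrets.token_bytes(32)  # stays inside this object; callers see only wrapped keys

    def wrap(self, dek: bytes) -> bytes:
        return seal(self._kek, dek)

    def unwrap(self, wrapped_dek: bytes) -> bytes:
        return open_sealed(self._kek, wrapped_dek)


def encrypt_record(kms: ExternalKeyManager, plaintext: bytes) -> tuple[bytes, bytes]:
    """Application-side encryption: fresh DEK per record; only the wrapped DEK is stored."""
    dek = secrets.token_bytes(32)
    return kms.wrap(dek), seal(dek, plaintext)


def decrypt_record(kms: ExternalKeyManager, wrapped_dek: bytes, ciphertext: bytes) -> bytes:
    """Ask the key manager to unwrap the DEK, then decrypt the record locally."""
    return open_sealed(kms.unwrap(wrapped_dek), ciphertext)
```

Because only the wrapped DEK is stored next to the data, whoever controls the master key also controls whether the data can be decrypted at all.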

How to Protect Database Workloads Using BYOK in Azure Synapse

For this post, I used the Azure Synapse integration with Fortanix to showcase BYOK operations that protect data at rest, before it reaches the consumption layer (such as Power BI). We will look at two use cases: protecting data within an Azure Synapse workspace, and storage-level encryption of the data lake when data is written or imported into it, both using keys created in Fortanix DSM. See the diagram below:

This post and demo highlight two things: the ease of BYOK operations and the integration with Azure Synapse. For more information on Azure Synapse and Fortanix BYOK, see:

- Azure Synapse: Azure Synapse Analytics | Microsoft Azure

- Fortanix BYOK: User's Guide: Azure Key Vault External KMS

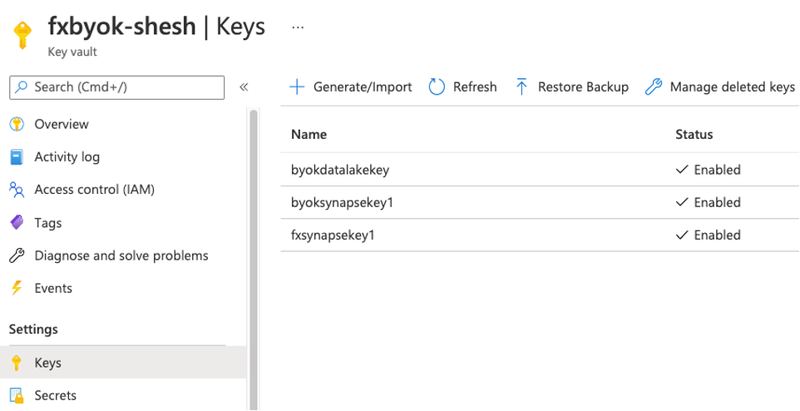

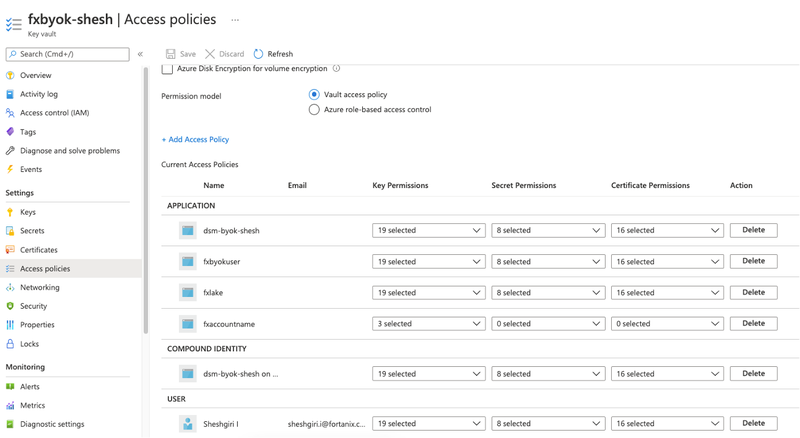

First, I integrated Azure Key Vault with Fortanix using the Cloud Data Control feature of DSM. After logging in to the DSM cluster, I added the Azure Key Vault fxbyok-shesh to Fortanix. Because DSM acts as an application that invokes encryption and decryption, you need to create an app under Azure App registrations and grant it Contributor access to the Key Vault.

Figure: Creation of Azure Key Vault fxbyok-shesh

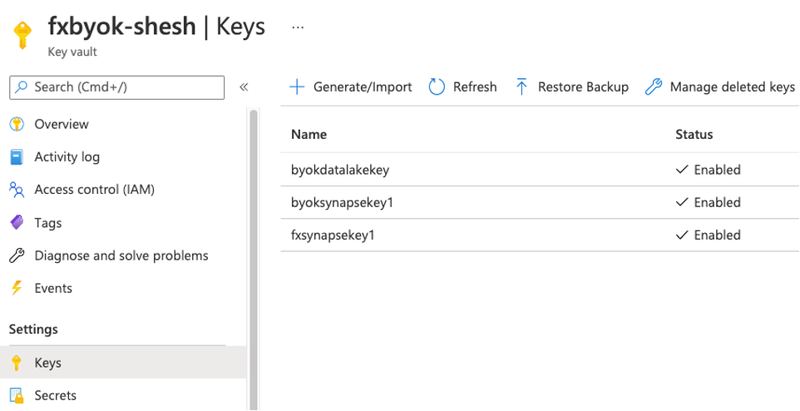

Once the Key Vault was created, I created a few keys in it.

Make sure to add the app with the Contributor role under the Key Vault access policies. In this case, the app name is dsm-byok-shesh.

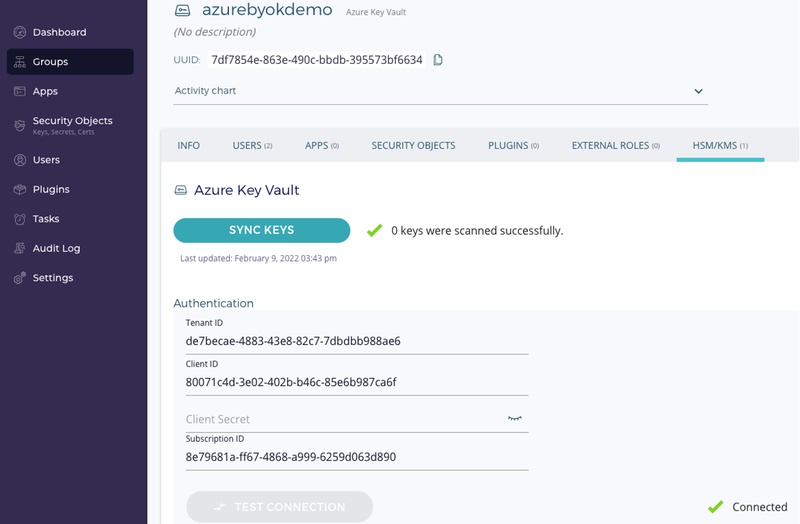

On the DSM side (see the figure below), create a group and map it to the Azure Key Vault fxbyok-shesh using the application details, such as the Tenant ID and Client ID.

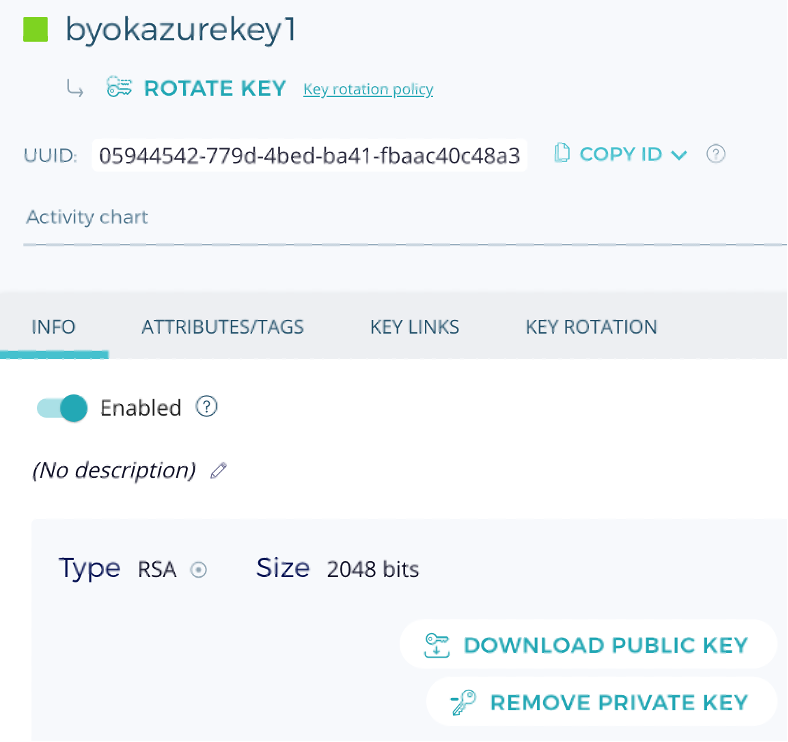

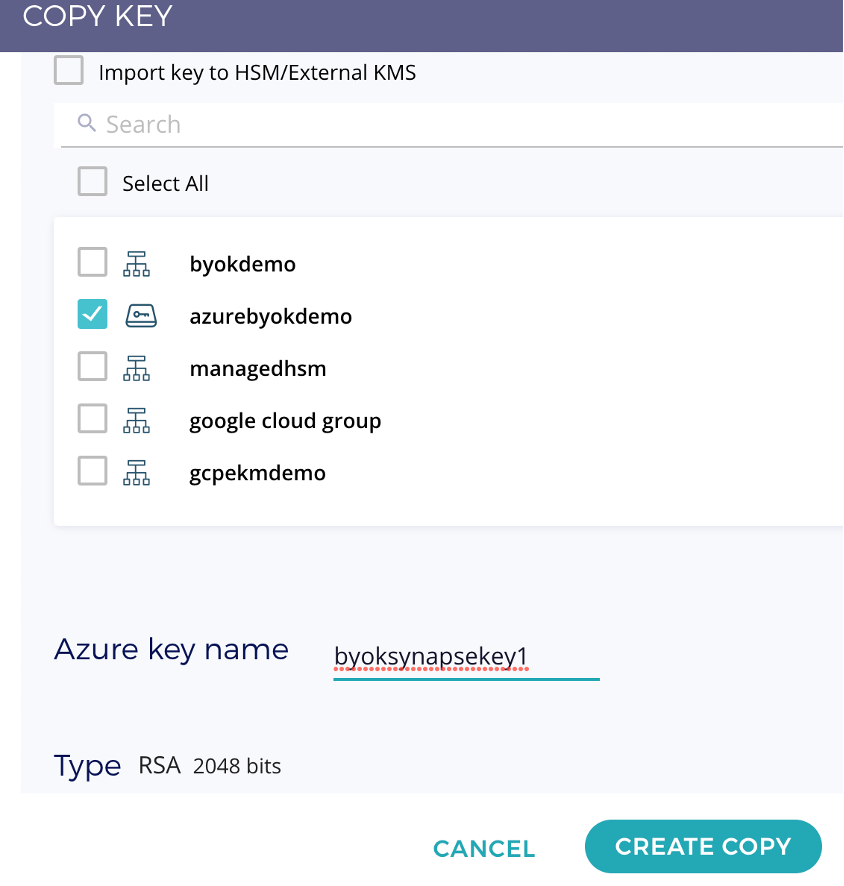

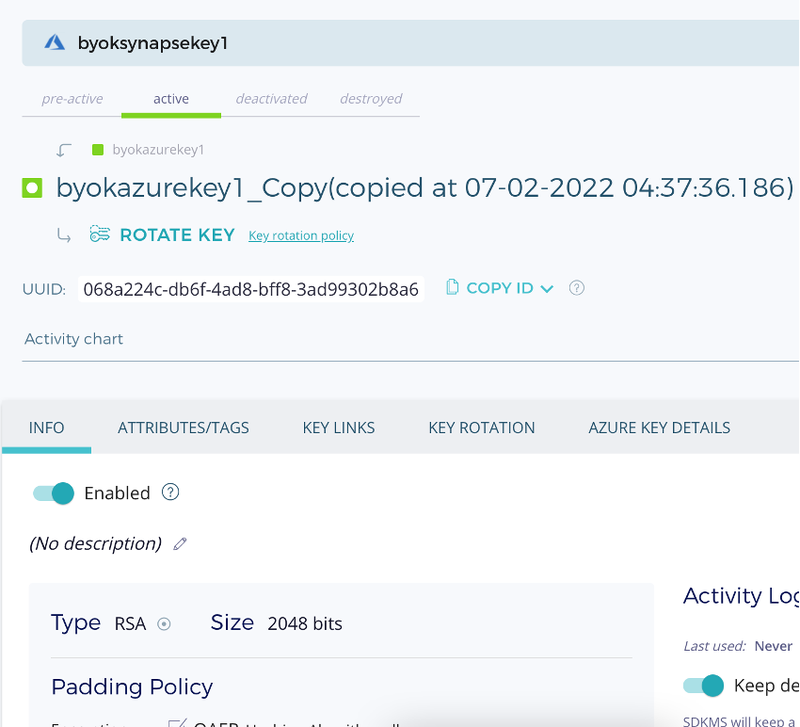

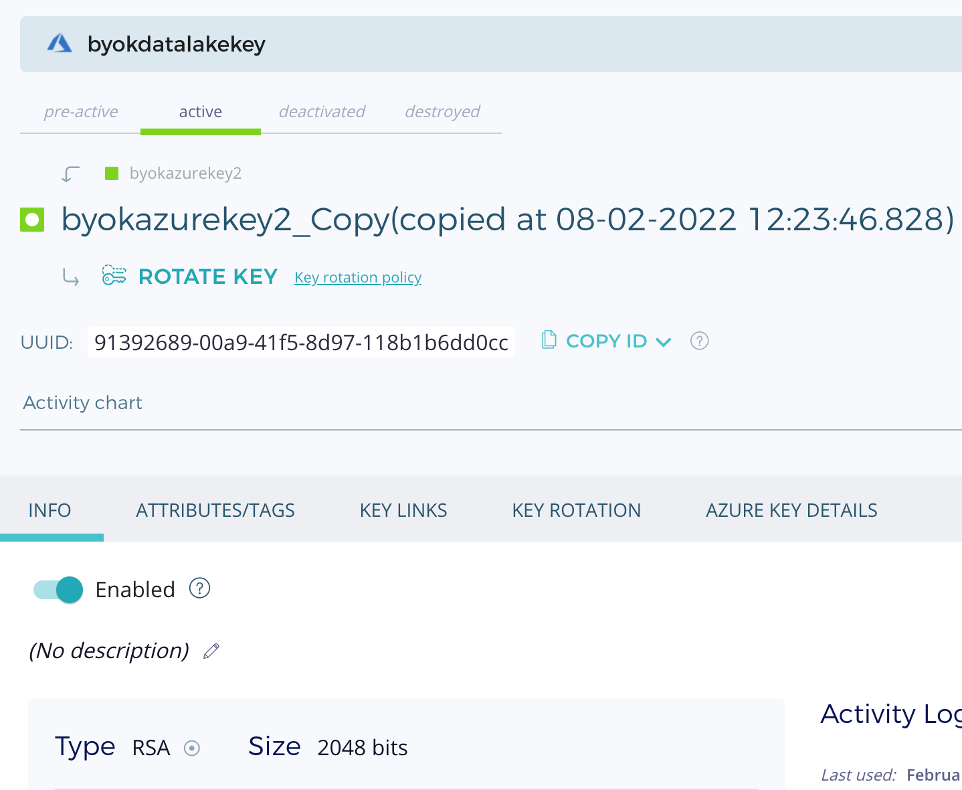

The next step is to create an asymmetric RSA key in Fortanix and copy it (BYOK) to Azure Key Vault as an external key. The same key will be used to encrypt all the SQL databases created inside the Synapse workspace.

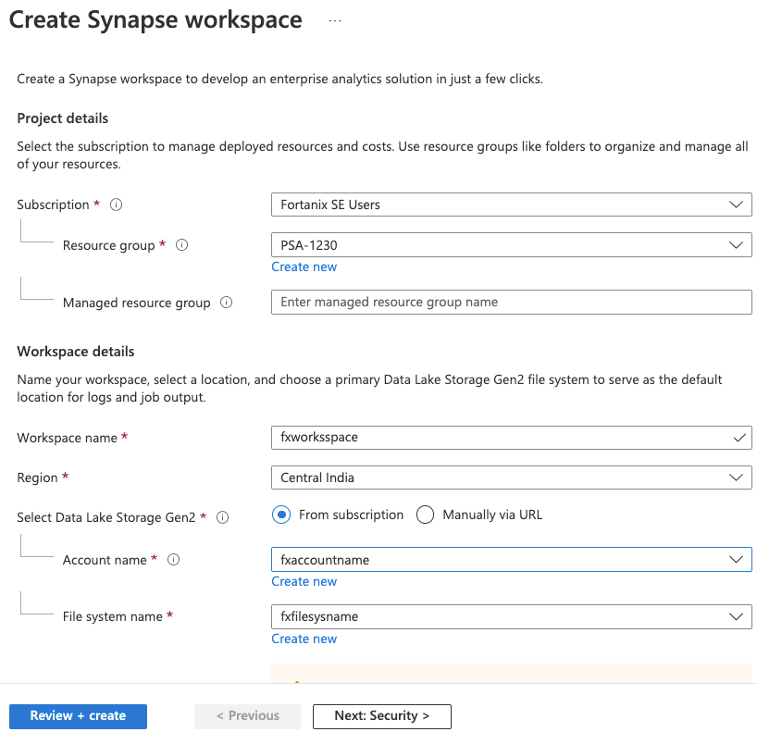

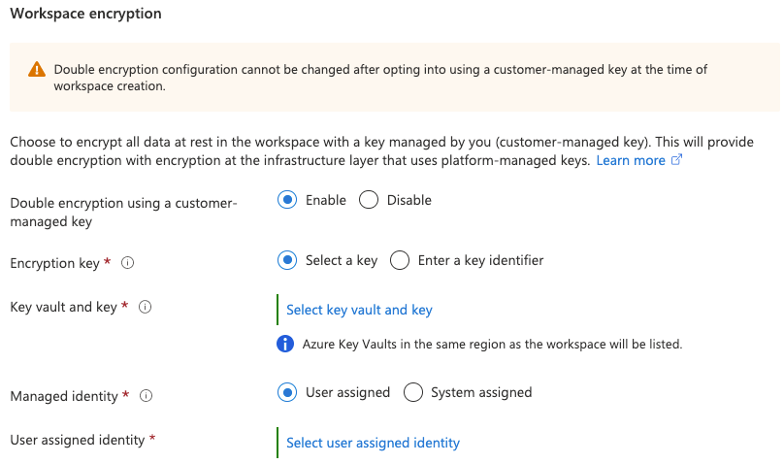

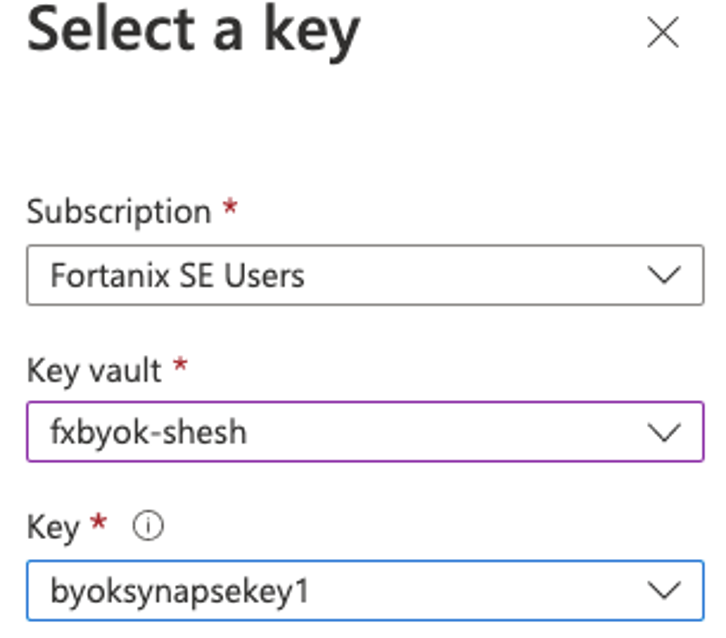

After this integration, the next step is to create an Azure Synapse workspace. I created the workspace in the Central India region, along with a Data Lake Storage Gen2 account named fxaccountname. On the Security tab, select the key vault (fxbyok-shesh) and the key (azuresynapsekey1), as shown in the figures below.

Select the workspace key that was copied from Fortanix to Azure Key Vault.

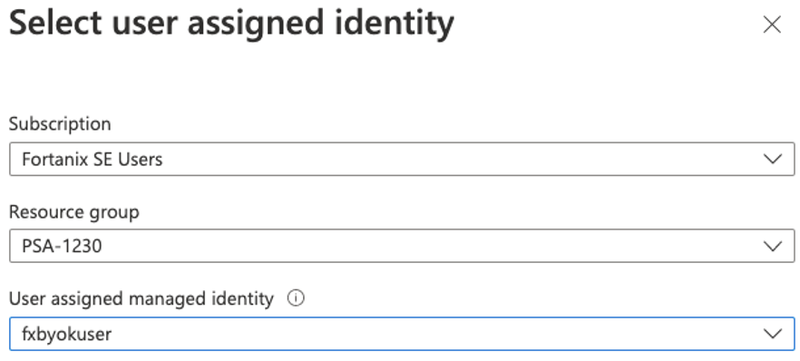

Make sure the workspace's managed identity is added to the Key Vault access policy so that the workspace can access the key.

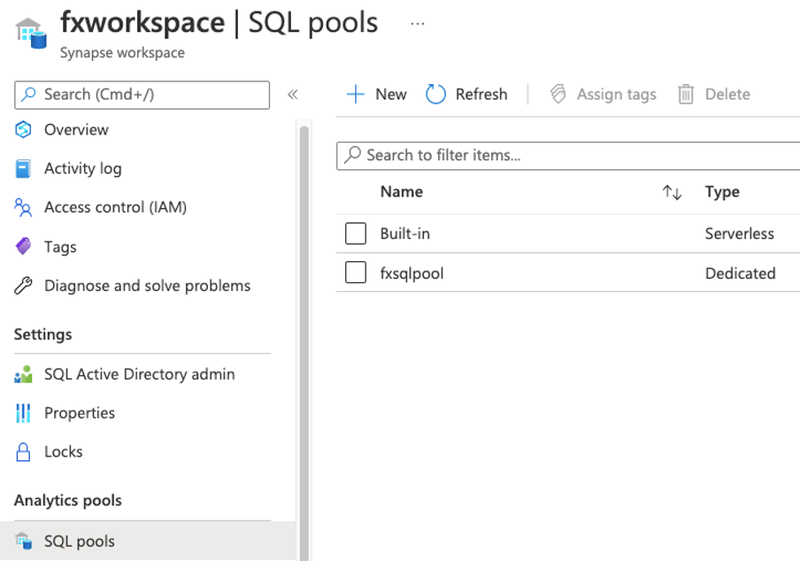

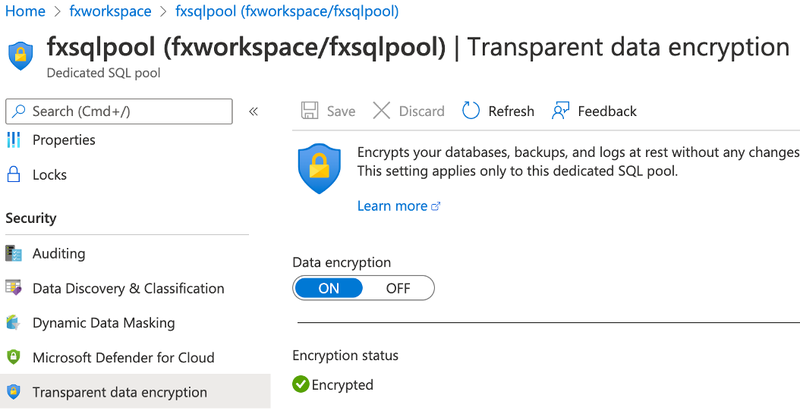

Once the workspace is deployed, create a SQL pool from the workspace and enable TDE on the database. This ensures that all data in the database is encrypted and stored as ciphertext.

I created a SQL pool under the workspace and enabled TDE.

The above use case encrypts data at rest on SQL pools (serverless or dedicated).

The second use case described in this post is encrypting the data lake storage using the Fortanix BYOK workflow. After ingestion, when data lands directly in the data lake, users in the consumption layer can use Fortanix external (BYOK) keys to decrypt the information for applications such as Power BI and Tableau.

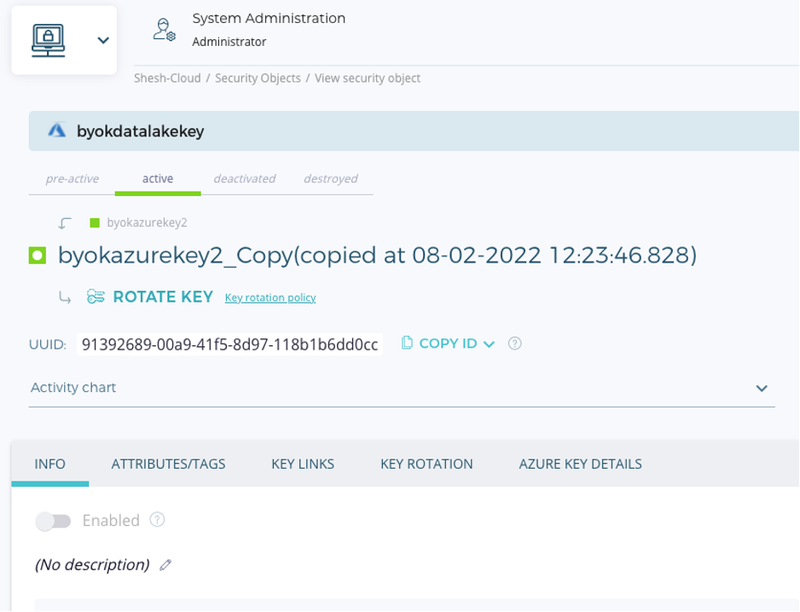

Create an asymmetric key in DSM and copy it (BYOK) to Azure Key Vault (fxbyok-shesh). This key will be used to encrypt the data lake fxaccountname.

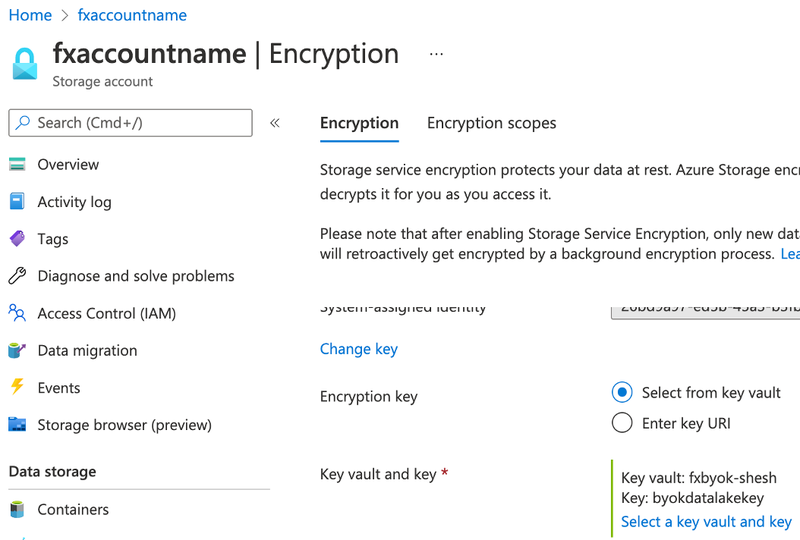

In the Azure subscription, go to the data lake storage account "fxaccountname" and enable encryption, choosing the Azure Key Vault and the external key byokdatalakekey (the key created in DSM).

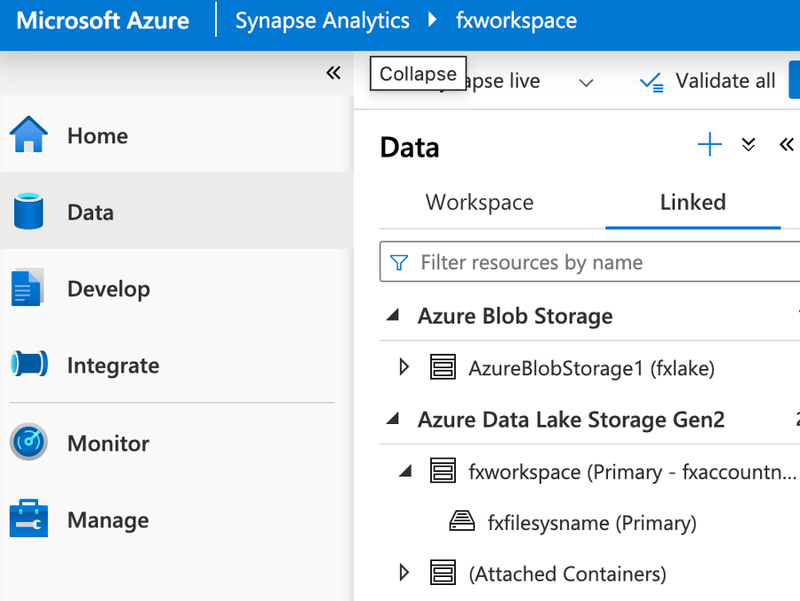
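If you prefer to script this step rather than use the portal, the same choice is expressed by the storage account's `encryption` property in an ARM template. The helper below is a hypothetical sketch that builds that fragment as a Python dict, assuming the `Microsoft.Storage/storageAccounts` schema (`keySource` set to `Microsoft.Keyvault`, plus `keyvaultproperties` with `keyname` and `keyvaulturi`); please verify the property names against the API version you deploy with. The storage account also needs a managed identity with get, wrapKey, and unwrapKey permissions on the key, which this fragment does not cover.

```python
import json


def storage_cmk_encryption(vault_name: str, key_name: str) -> dict:
    """Hypothetical helper: ARM-style 'encryption' fragment pointing a storage account
    at a customer-managed key in Azure Key Vault (here, the key copied from DSM)."""
    return {
        "keySource": "Microsoft.Keyvault",  # use a Key Vault key instead of a Microsoft-managed key
        "keyvaultproperties": {
            "keyname": key_name,
            "keyvaulturi": f"https://{vault_name}.vault.azure.net",
        },
    }


if __name__ == "__main__":
    # Names taken from this walkthrough: vault fxbyok-shesh, key byokdatalakekey.
    print(json.dumps(storage_cmk_encryption("fxbyok-shesh", "byokdatalakekey"), indent=2))
```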

Once the encryption key is mapped to the data lake, open Azure Synapse Studio and link the Azure Data Lake Storage Gen2 account. Make sure the managed identity is mapped to the Data Lake storage account.

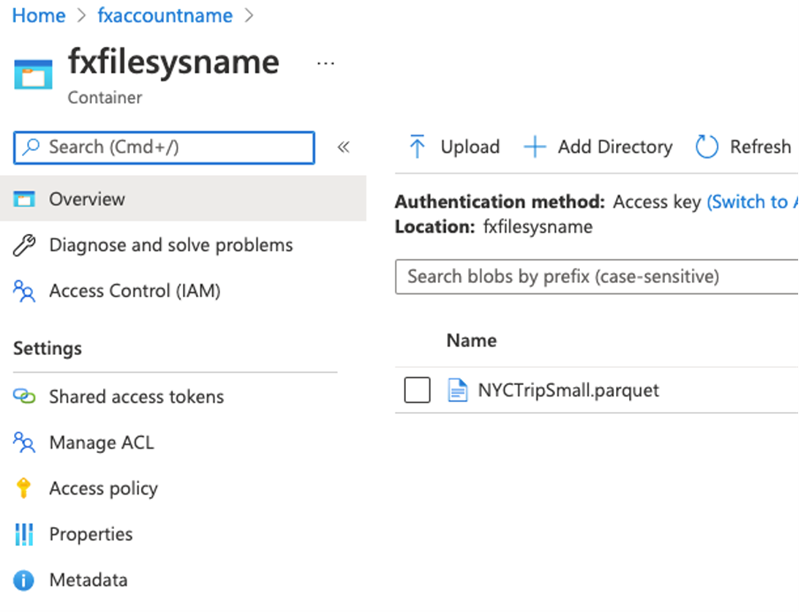

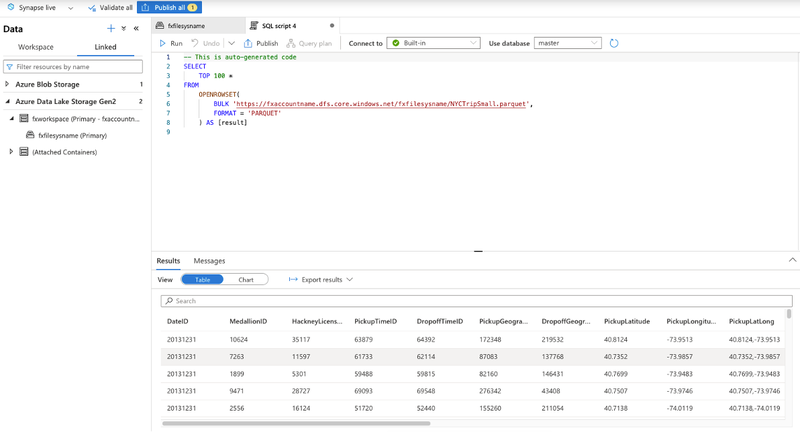

To check whether encryption works for the use case of encrypting data written to the data lake with Fortanix DSM keys, as described earlier, I linked an external data lake to the Azure Synapse database and uploaded data as a Parquet file to the Azure Data Lake Storage account. The taxi data schema includes record fields capturing pick-up and drop-off dates and times, pick-up and drop-off locations, trip distances, itemized fares, rate types, payment types, and driver-reported passenger counts.

Using Synapse Studio, I mapped the Data Lake storage account "fxaccountname" to the workspace.

Once the data was attached, I ran a SELECT TOP 100 query to return 100 rides. As the figure below shows, the query completed successfully.

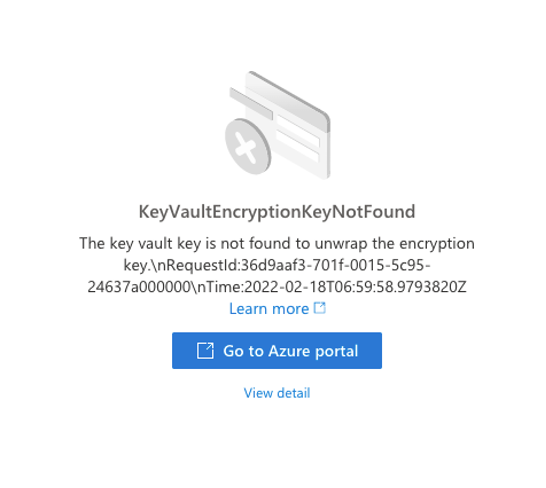
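One common way to run such a query is the "Select TOP 100 rows" action in Synapse Studio, which produces a serverless SQL query that reads the Parquet file directly with `OPENROWSET`. The helper below only assembles that query text so its shape is visible; it is an illustrative sketch, not part of any Azure SDK, and the container and file path in the usage line are hypothetical placeholders because the post does not give them.

```python
def top_rows_query(account: str, container: str, path: str, n: int = 100) -> str:
    """Build a serverless SQL query that reads the first n rows of a Parquet file in ADLS Gen2."""
    if n <= 0:
        raise ValueError("n must be positive")
    if any("'" in part for part in (account, container, path)):
        raise ValueError("quotes are not allowed in the storage path")  # keeps the SQL literal well-formed
    url = f"https://{account}.dfs.core.windows.net/{container}/{path}"
    return (
        f"SELECT TOP {n} *\n"
        "FROM OPENROWSET(\n"
        f"    BULK '{url}',\n"
        "    FORMAT = 'PARQUET'\n"
        ") AS [result];"
    )


if __name__ == "__main__":
    # "taxi" and "yellow/tripdata.parquet" are hypothetical; fxaccountname is from this walkthrough.
    print(top_rows_query("fxaccountname", "taxi", "yellow/tripdata.parquet"))
```

Note that `TOP` without an `ORDER BY` returns an arbitrary 100 rows, which is enough for a smoke test of whether the data can be decrypted and read.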

To validate the Fortanix BYOK workflow, I disabled the security object (SO) "byokdatalakekey" in the Fortanix DSM UI. The expected behavior is that no queries can run on the data lake storage once the SO is disabled in DSM. This is exactly what we saw during the integration: the query failed because it could not find the Key Vault encryption key needed to unwrap the encrypted data, as shown in the Azure Synapse Studio screenshots below.
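The failure follows from how customer-managed keys are used: the service keeps only a wrapped data-encryption key (DEK) next to the data, so each read has to ask the external key to unwrap it first. The simulation below models that dependency under simplifying assumptions. `ExternalKey`, `EncryptedTable`, and `KeyDisabledError` are hypothetical names, the XOR/HMAC cipher is a stdlib-only toy rather than a production algorithm, and the model unwraps on every query, whereas real services may cache unwrapped keys for a period, so revocation is not necessarily instantaneous.

```python
import hashlib
import hmac
import secrets


class KeyDisabledError(Exception):
    """Raised when the external key is disabled and can no longer unwrap data keys."""


def _xor_stream(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy stream cipher (illustration only): XOR with HMAC-SHA256(key, nonce || offset) blocks."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        block = hmac.new(key, nonce + offset.to_bytes(8, "big"), hashlib.sha256).digest()
        out += bytes(a ^ b for a, b in zip(data[offset:offset + 32], block))
    return bytes(out)


class ExternalKey:
    """Hypothetical model of a customer-managed key (e.g. byokdatalakekey) held outside the service."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.enabled = True
        self._secret = secrets.token_bytes(32)

    def disable(self) -> None:
        self.enabled = False

    def enable(self) -> None:
        self.enabled = True

    def wrap(self, dek: bytes) -> bytes:
        nonce = secrets.token_bytes(16)
        return nonce + _xor_stream(self._secret, nonce, dek)

    def unwrap(self, wrapped: bytes) -> bytes:
        if not self.enabled:
            raise KeyDisabledError(f"key {self.name!r} is disabled; cannot unwrap the data key")
        return _xor_stream(self._secret, wrapped[:16], wrapped[16:])


class EncryptedTable:
    """Hypothetical table that persists only ciphertext rows and a wrapped data key."""

    def __init__(self, key: ExternalKey) -> None:
        self.key = key
        self.wrapped_dek = key.wrap(secrets.token_bytes(32))  # the plaintext DEK is not kept
        self.rows: list[bytes] = []

    def insert(self, row: bytes) -> None:
        dek = self.key.unwrap(self.wrapped_dek)
        nonce = secrets.token_bytes(16)
        self.rows.append(nonce + _xor_stream(dek, nonce, row))

    def select_top(self, n: int) -> list[bytes]:
        # Every query unwraps the DEK first; a disabled key makes this step fail.
        dek = self.key.unwrap(self.wrapped_dek)
        return [_xor_stream(dek, r[:16], r[16:]) for r in self.rows[:n]]
```

Calling `key.disable()` and then `select_top(100)` raises `KeyDisabledError`; re-enabling the key makes the same query succeed again, because the data itself was never re-encrypted.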

These tests demonstrate the following benefits:

- Unified security across clouds

- Separation of keys from data

- Improved high availability and disaster recovery (HA/DR) for critical cloud infrastructure

Building a Secure Database Strategy Across Multi-Cloud Environments

When you design your encryption scheme, Fortanix DSM (KMS) can help you integrate and manage your encryption in a secure, reliable, and highly available way.

Cloud services such as Azure Data Lake, Amazon RDS, and Azure VMs can be encrypted using an external key, giving you one place to manage your encryption keys, security policies, and access logs. Customer-managed keys provide an extra level of database protection for customers with sensitive data.

With this feature, customers manage the encryption key themselves and make it accessible to the data lake. If a customer decides to disable access, the data can no longer be decrypted, and all running queries are aborted.

This has the following benefits for customers:

- It makes it technically impossible for the data lake provider to comply with requests for access to customer data, because the provider cannot decrypt that data without the customer's key.

- The customer can actively mitigate data breaches and limit data exfiltration.

- It gives the customer full control over the data lifecycle.

You may already have plenty of questions, and we would love to answer them.

You can begin by reading the datasheet here.