Sixty years ago, on July 12, 1962, the first-ever live transatlantic television signal was beamed across the ocean. And while it wasn’t exactly the first case of real-time streaming, it was undoubtedly one of the most historic days for globalization, as we were shepherded into a new era not just of a connected world but of real-time video streaming.

From then on, the term live streaming was commercialized on a vast scale, if not coined. Haven't all these years been long enough to explore every aspect of streaming and address all its shortcomings? Is talking about the challenges and data security of real-time streaming, then, just beating a dead horse? Apparently not.

Why Real-Time Data Stream Security Matters

Real-time data streams are revolutionizing data analytics and machine learning. They enable organizations to react instantly to crucial insights, optimize operations, and gain a competitive edge. Real-time machine learning, for instance, trains models on live incoming data streams, continuously improving them as they adapt to new patterns.

However, this agility comes at a cost: the potential exposure of sensitive data during processing.

Traditional security measures often fall short in this context. Encryption at rest might not be enough, because data must be decrypted in memory before it can be processed. Malicious actors could exploit vulnerabilities within the processing environment to access sensitive data in real time.

Real-time streaming is extremely valuable in industries such as healthcare, energy, manufacturing, and others, where innovation increasingly depends on data agility. Data streaming is one of the most prominent disruptive innovations bringing much-needed agility to data pipelines for machine learning and data analytics in general.

One area, though, that remains relatively unexplored is ensuring the privacy and confidentiality of sensitive data streams across their lifecycle. The same analytical insights that can so immensely benefit an organization can work against it if they fall into unauthorized hands. How, then, do we ensure data security and confidentiality for applications that consume real-time data streams?

The simple one-line answer: run the consumer applications in trusted execution environments, which protect both the data being consumed and the applications themselves. Several technologies enable applications to run within secure enclaves; prominent examples include AWS Nitro Enclaves and Intel® SGX.

Confidential Computing: Securing Your Data at Runtime

Fortanix Confidential Computing offers a powerful solution for securing real-time data streams. It leverages Trusted Execution Environments (TEEs) to isolate applications processing sensitive data. This isolation ensures:

- Data confidentiality: Even in a compromised environment, the data remains encrypted at runtime, inaccessible to unauthorized users.

- Code integrity: Only authorized code can execute within the enclave, preventing malware injection or tampering.
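To make the code-integrity idea concrete, here is a deliberately simplified Python sketch of measurement-based allow-listing: hash the application code and refuse to run anything whose hash is not on an approved list. It is an analogy only, assuming a software check; in real TEEs such as Intel SGX, the measurement is taken by the hardware and proven to remote parties through attestation, and this sketch does not reflect how Fortanix implements it. The names `measure` and `may_run` are hypothetical.

```python
import hashlib
import hmac
from typing import Iterable


def measure(code: bytes) -> str:
    """Return a SHA-256 digest of the code, standing in for an enclave measurement."""
    return hashlib.sha256(code).hexdigest()


def may_run(code: bytes, approved_measurements: Iterable[str]) -> bool:
    """Allow execution only if the code's measurement is on the approved list.

    Hypothetical software analogy: real TEEs measure code in hardware and
    prove the measurement through remote attestation.
    """
    actual = measure(code)
    # Constant-time comparison avoids leaking how many characters matched.
    return any(hmac.compare_digest(actual, approved) for approved in approved_measurements)


if __name__ == "__main__":
    app = b"def handler(event): return event['amount'] * 2\n"
    approved = {measure(app)}
    print(may_run(app, approved))                         # True
    print(may_run(app + b"# injected code\n", approved))  # False
```

Any change to the code, even a single appended comment, produces a different digest and is rejected.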

Securing Real-World Scenarios with Fortanix

With Fortanix, the secure enclaves enable applications to run in confidential computing environments, verify the integrity of those environments, and manage the enclave application lifecycle.

Here, I explore three of the most common real-time data streaming scenarios and show how Fortanix Confidential Computing secures the data and application code by isolating them, preventing unauthorized access and tampering at runtime even if the underlying infrastructure is compromised.

Data Streams from Transactional Systems/Applications

Any system that generates transactional data is a source of real-time data streams. OLTP systems such as payment processing applications, ATMs, point-of-sale systems, traffic from an eCommerce website, or data streams through files/logs are common examples of transactional data streams.

Because these transactional systems generate business and IT data, consuming it in real time is essential for decision makers who must make crucial decisions in the moment. The data must flow from the source application to target applications that compute on it.

Apache Kafka® is a widely used platform for stream processing in this case. Since being open-sourced in 2011, Kafka has swiftly evolved from a messaging queue into a full-fledged event streaming platform for transactional as well as mission-critical applications.

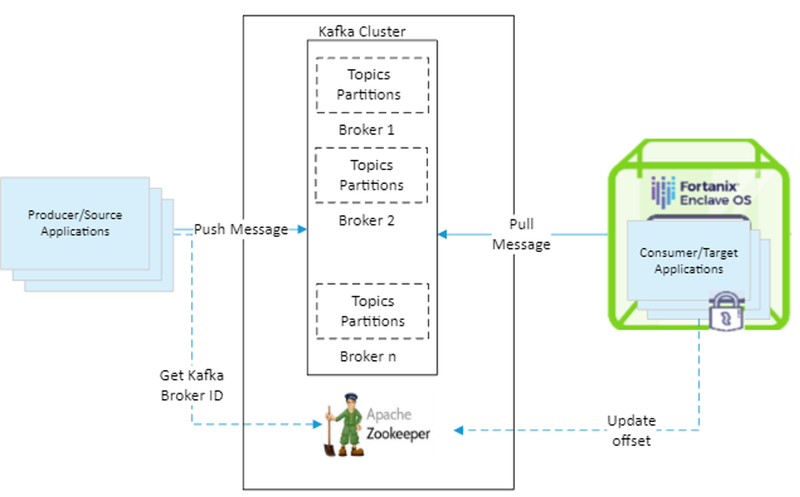

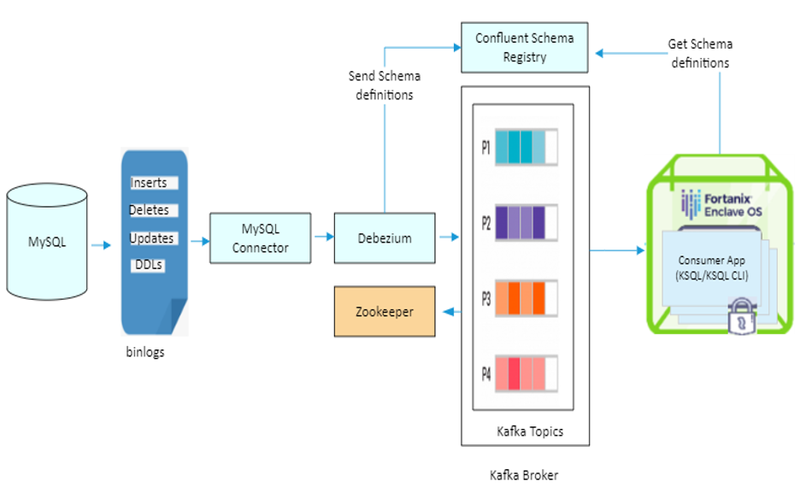

Shown here is a basic, simplified Kafka architecture with its consumer application running in a trusted execution environment. In this classic Kafka streaming workflow, Fortanix Enclave OS adds much-needed data security and confidentiality.

In this case, the consumer application must be containerized and converted to run inside an enclave using Fortanix’s confidential computing solutions. Once the real-time data stream flows into a secure enclave, any application can consume it to draw key insights, or it can be redirected to downstream destinations after further processing or transformation.

Examples of such transformations include encrypting sensitive data/PII before sending it to the cloud, stripping or masking sensitive fields, or simply aggregating records when the volume must be contained.
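The transformations listed above can be sketched in a few lines of Python. The record layout (`customer_id`, `card`, `cvv`, `merchant`, `amount`) and the key are hypothetical. Because the Python standard library has no cipher, this sketch swaps in keyed pseudonymization (HMAC-SHA256) where the text mentions encryption; a real pipeline would encrypt with a vetted cryptography library and keep keys in a key manager.

```python
import hashlib
import hmac
from collections import defaultdict
from typing import Dict, Iterable

# Hypothetical key for illustration only; in practice, fetch it from a key manager.
PSEUDONYM_KEY = b"demo-key-held-inside-the-enclave"


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace an identifier with a keyed hash so records stay joinable without exposing it."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def mask_card(pan: str) -> str:
    """Keep only the last four digits of a card number."""
    digits = "".join(ch for ch in pan if ch.isdigit())
    return "*" * max(len(digits) - 4, 0) + digits[-4:]


def transform(record: dict) -> dict:
    """Strip, mask, and pseudonymize the sensitive fields of one transaction record."""
    out = dict(record)  # copy, so the caller's record is left untouched
    out.pop("cvv", None)                                      # strip
    out["card"] = mask_card(record["card"])                   # mask
    out["customer_id"] = pseudonymize(record["customer_id"])  # pseudonymize
    return out


def aggregate(records: Iterable[dict]) -> Dict[str, float]:
    """Reduce volume by summing transaction amounts per merchant."""
    totals: Dict[str, float] = defaultdict(float)
    for r in records:
        totals[r["merchant"]] += r["amount"]
    return dict(totals)
```

For example, `transform({"customer_id": "c-42", "card": "4111 1111 1111 1111", "cvv": "123", "merchant": "m-1", "amount": 10.0})` drops `cvv`, turns the card into `************1111`, and replaces the customer ID with a 16-character keyed hash, all before the record leaves the enclave.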

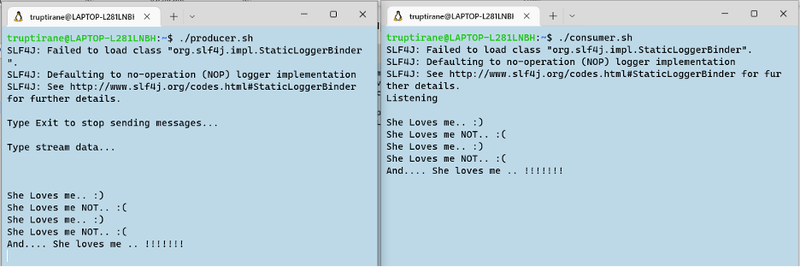

In which scenarios would you need all three: time-criticality, data privacy, and confidentiality? The snapshot below pretty much sums it up!

Things of the Internet of Things (Operational Data from IoT Devices)

One cannot possibly talk about real-time data streams without talking about IoT. Digitalization has swept the entire world off its feet, and the world has not landed back on its feet since. IoT plays not just a major role but the lead role in making digitalization possible.

These connected IoT devices, whether plugs attached to operational equipment, sensors on machinery, or production-line artifacts, generate digital operational data used in scenarios such as remote monitoring, predictive maintenance, operations optimization, and facilities management.

The powerful, immediate insights garnered from this data can give away sensitive information about how an organization functions, such as operating conditions, the precise location of production lines, and derived analytics. IoT is not just about collecting data; it is about collecting a lot of data and making sense of these enormous data streams.

When it comes to IoT, MQTT is the most widely used messaging protocol: a set of rules that defines how IoT devices publish and subscribe to data. It is designed as an extremely lightweight publish/subscribe messaging transport, ideal for connecting IoT devices with a small code footprint and minimal network bandwidth.
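In MQTT's publish/subscribe model, subscribers register topic filters and the broker forwards each published message to every subscriber whose filter matches the message's topic. The Python sketch below implements the matching rules from the MQTT specification: topic levels are separated by `/`, `+` matches exactly one level, `#` matches the parent level and any number of child levels (and must come last), and filters starting with a wildcard do not match topics starting with `$`, such as `$SYS/...`. The topic names are hypothetical, and the function does not validate malformed filters.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic filter matches a concrete topic name."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")

    # Filters starting with a wildcard must not match topics starting with '$' (e.g. $SYS).
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False

    for i, level in enumerate(f_levels):
        if level == "#":
            # Multi-level wildcard: valid only as the last level; matches the parent and all children.
            return i == len(f_levels) - 1
        if i >= len(t_levels):
            return False  # the filter has more levels than the topic
        if level != "+" and level != t_levels[i]:
            return False  # literal level mismatch ('+' matches any single level)
    return len(f_levels) == len(t_levels)


if __name__ == "__main__":
    print(topic_matches("factory/+/temperature", "factory/line1/temperature"))  # True
    print(topic_matches("factory/#", "factory/line1/zone2/vibration"))          # True
    print(topic_matches("factory/+/temperature", "factory/line1/humidity"))     # False
```

A subscriber inside an enclave could, for instance, subscribe to `factory/+/temperature` to receive temperature readings from every production line without knowing the line names in advance.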

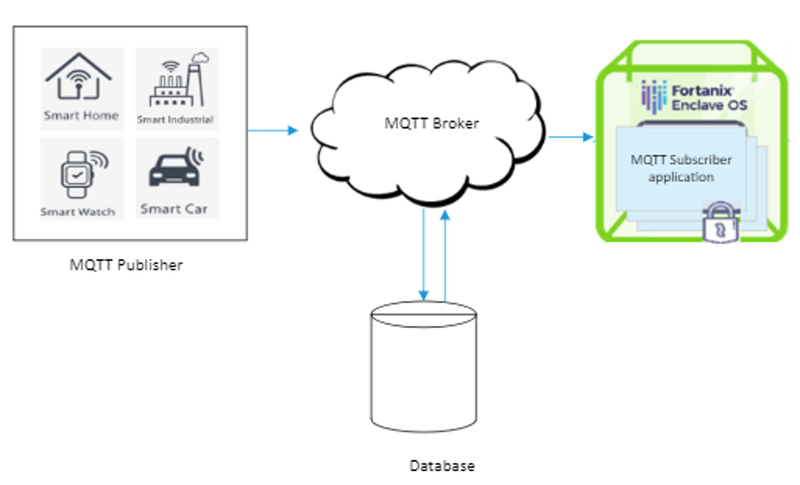

Shown here is how Fortanix Confidential Computing supports MQTT subscriber applications that run in a secure enclave, consuming real-time IoT sensor data and computing on it to draw key insights, while preserving a fault-tolerant MQTT publish/subscribe architecture.

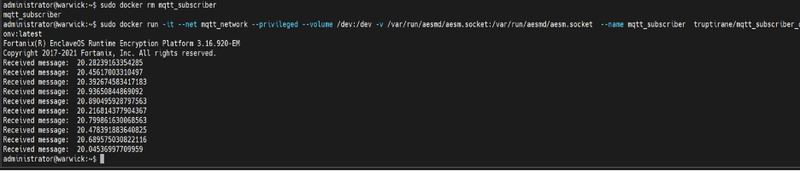

Demonstrated below is how a sample MQTT subscriber application securely receives messages routed through an MQTT broker. This example uses the open-source Eclipse Mosquitto public broker at mqtt.eclipseprojects.io to broadcast messages for demonstration.

In this case, we simply print the received messages to the console, but the subscriber can process the published data in any way necessary while Fortanix Enclave OS protects it with runtime encryption.

Similarly, the broker can persist the data in a database or in the cloud with suitable at-rest data protection in place.
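Beyond printing to the console, the subscriber's processing step could look like the following Python sketch. It assumes a hypothetical JSON payload such as `{"temp_c": 71.5}`, keeps a running mean per topic without storing past readings, and records readings above a threshold. It is written as a plain function of `(topic, payload)` so it runs without a broker.

```python
import json
from collections import defaultdict
from typing import DefaultDict, List, Tuple


class SensorSubscriber:
    """Processes sensor readings delivered by an MQTT broker (hypothetical payload format)."""

    def __init__(self, alert_threshold: float = 80.0) -> None:
        self.alert_threshold = alert_threshold
        self.count: DefaultDict[str, int] = defaultdict(int)
        self.mean: DefaultDict[str, float] = defaultdict(float)
        self.alerts: List[Tuple[str, float]] = []

    def handle(self, topic: str, payload: bytes) -> None:
        reading = json.loads(payload.decode("utf-8"))
        value = float(reading["temp_c"])

        # Incremental (streaming) mean: no need to keep past readings in memory.
        self.count[topic] += 1
        self.mean[topic] += (value - self.mean[topic]) / self.count[topic]

        if value > self.alert_threshold:
            self.alerts.append((topic, value))

        print(f"{topic}: {value:.1f} C (running mean {self.mean[topic]:.2f})")


if __name__ == "__main__":
    sub = SensorSubscriber(alert_threshold=80.0)
    for temp in (70.0, 75.0, 85.0):
        sub.handle("factory/line1/temperature", json.dumps({"temp_c": temp}).encode("utf-8"))
    print(sub.alerts)  # [('factory/line1/temperature', 85.0)]
```

With the paho-mqtt client library, a handler like this would typically be wired in through the `on_message(client, userdata, message)` callback, passing `message.topic` and `message.payload`.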

Change Data Capture from Relational Databases

Data management has been a nuisance since the early days, when mainframe computers had to bring together data from a multitude of sources to produce even the simplest month-end reports. And even though enterprises all around us are moving to the cloud today, part of the world remains firmly grounded.

Yes, I am talking about the on-premises traditional databases and datastores that get populated with data through different legacy applications. How can we consume real-time updates to these datastores in our computing applications? The answer is Change Data Capture (CDC). CDC makes it possible to identify and capture changes to data in a database and then deliver these changes in real time to a downstream process or system.

In this case, I have used MySQL as an example of a traditional database. MySQL supports CDC through its binary log (binlog), which records all operations in the order in which they are committed. This includes changes to both the table schema and the data in tables.

Here, I have used the Debezium MySQL connector that reads the binlog, produces change events for row-level INSERT, UPDATE, and DELETE operations, and emits the change events to a Kafka topic. The consumer applications can then read data published to this Kafka topic.
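On the consuming side, each Debezium change event carries an envelope with `op` (`c` for create, `u` for update, `d` for delete, `r` for a snapshot read) plus `before` and `after` images of the row. The Python sketch below applies such events to an in-memory table keyed by primary key, roughly what a downstream materialized view does. It assumes the JSON converter (with or without the `schema`/`payload` wrapper) and a hypothetical `movies` table keyed by `movie_id`; reading the events from the Kafka topic itself is left out so the example runs standalone.

```python
import json
from typing import Any, Dict, Optional

Table = Dict[Any, dict]


def apply_change_event(table: Table, raw_value: Optional[bytes], key_field: str = "movie_id") -> None:
    """Apply one Debezium change event (JSON-encoded Kafka record value) to an in-memory table."""
    if raw_value is None:
        # Tombstone record that Debezium emits after a delete (for log compaction); nothing to apply.
        return

    event = json.loads(raw_value)
    # With schemas enabled, the JSON converter wraps the envelope as {"schema": ..., "payload": ...}.
    payload = event.get("payload", event)
    op = payload["op"]

    if op in ("c", "r", "u"):
        # Create, snapshot read, or update: the "after" image is the current row state.
        row = payload["after"]
        table[row[key_field]] = row
    elif op == "d":
        # Delete: only the "before" image is populated.
        table.pop(payload["before"][key_field], None)
    else:
        raise ValueError(f"operation {op!r} is not handled in this sketch")


if __name__ == "__main__":
    movies: Table = {}
    events = [
        {"op": "c", "before": None, "after": {"movie_id": 1, "title": "Once Upon a Time"}},
        {"op": "u", "before": {"movie_id": 1, "title": "Once Upon a Time"},
         "after": {"movie_id": 1, "title": "Once Upon a time in the East"}},
    ]
    for e in events:
        apply_change_event(movies, json.dumps(e).encode("utf-8"))
    print(movies)  # {1: {'movie_id': 1, 'title': 'Once Upon a time in the East'}}
```

Keying on the primary key makes replays idempotent for creates and updates: applying the same event twice leaves the table in the same state.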

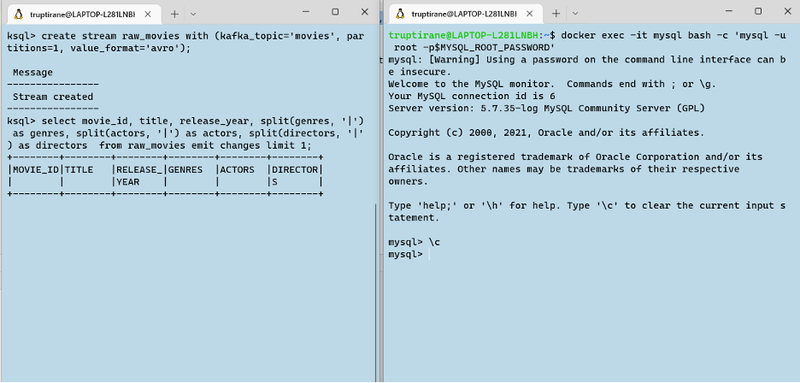

Data analysts usually rely on APIs for stream processing and analytics on real-time data stored in Kafka. Implementing this, though, requires a strong command of programming languages such as Java or Scala. To remove this barrier, Confluent introduced KSQL (now ksqlDB), which lets you express data analytics operations as SQL queries.

ksqlDB has a server component and a command-line interface (CLI) component. The CLI sends queries to the ksqlDB server cluster over its REST API. In this case, the consumer application is the combination of the ksqlDB server and the CLI. Both applications are converted using Fortanix Confidential Computing Manager (CCM) so that they can run inside a secure enclave.
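For a sense of what travels over that REST interface, the Python sketch below builds, but does not send, the kind of request a client issues to run a statement: a POST to the ksqlDB server's `/ksql` endpoint with a JSON body containing the statement. The server address (localhost on ksqlDB's default port 8088) and the Kafka topic name `mysql.moviesdb.movies` are assumptions for illustration, as is the Avro value format, which lets ksqlDB infer the stream's columns from Schema Registry.

```python
import json
import urllib.request

# Assumed local ksqlDB server on the default port; adjust for your deployment.
KSQLDB_SERVER = "http://localhost:8088"

# Hypothetical topic name; Debezium derives real topic names from its connector configuration.
CREATE_RAW_MOVIES = (
    "CREATE STREAM raw_movies WITH ("
    "KAFKA_TOPIC='mysql.moviesdb.movies', VALUE_FORMAT='AVRO');"
)


def build_ksql_request(statement: str, server: str = KSQLDB_SERVER) -> urllib.request.Request:
    """Build a POST /ksql request carrying one ksqlDB statement as JSON."""
    body = json.dumps({"ksql": statement, "streamsProperties": {}}).encode("utf-8")
    return urllib.request.Request(
        f"{server}/ksql",
        data=body,
        method="POST",
        headers={
            "Accept": "application/vnd.ksql.v1+json",
            "Content-Type": "application/vnd.ksql.v1+json; charset=utf-8",
        },
    )


if __name__ == "__main__":
    req = build_ksql_request(CREATE_RAW_MOVIES)
    print(req.get_method(), req.full_url)
    # Sending requires a running ksqlDB server:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp))
```

When the server itself runs inside an enclave, this request is just ordinary HTTP from the client's point of view; the protection applies to how the server processes the stream.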

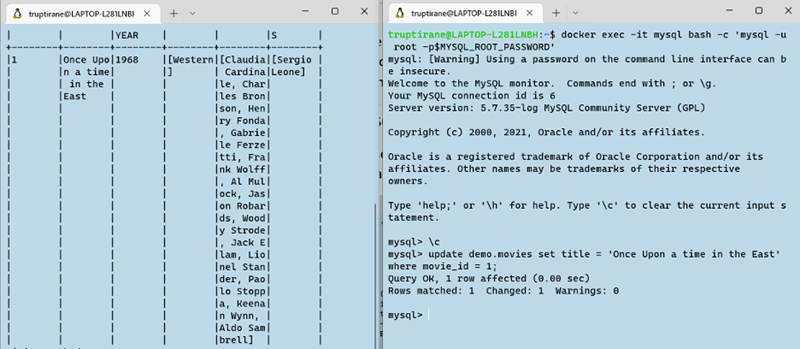

In the next snapshot, we update the movie with movie_id=1, setting its title to 'Once Upon a time in the East'. The change flows in real time into the KSQL "raw_movies" stream we just created.

Summary

Using three common sources of real-time data streams, we demonstrated how the Fortanix Confidential Computing solution protects the privacy of real-time data streams and helps consume them securely. These scenarios are not the only possibilities for secure consumption of real-time data with Fortanix.

Rather, there are many other ways that real-time data can be integrated securely using our suite of products. This blog is merely an attempt to kindle romance and mark the beginning of venturing into new avenues.

Eminent American scholar William Edwards Deming famously said: “Without data, you're just another person with an opinion.” Well, without data, your opinion may at least be right in some instances, but with unreliable data, even that is a remote possibility.

If your organization thrives on data-driven technologies such as AI, ML, and IoT, take a data-driven approach to cybersecurity. Read this eBook to learn more about what confidential computing is, how it works, and the business value it delivers.