Why is hardware-based trust becoming the foundation for secure, sovereign and trusted AI at scale?

For decades, Moore’s Law has been used to define technology leaps. Based on an observation made by Intel co-founder Gordon Moore in 1965, the law originally referred to the number of transistors on an integrated circuit.

In its best-known form, it states that the number doubles approximately every two years, leading to a corresponding increase in computing power and decrease in cost. It was never a scientific law, but rather a projection that guided innovation in the semiconductor industry for decades.

Eventually, Moore’s Law became a metaphor for the rapid pace of technological advancement in general, not just within hardware. This broader application extended to software development and other areas, where product cycles were sometimes measured in relation to the pace of Moore’s Law.

And then came AI.

AI is not only defying Moore’s Law; it is stress-testing it. We may soon need an entirely new “law” for measuring technological progress: not only in terms of the exponential compute, memory, and efficiency that AI models demand, but also in terms of how we, as humans, can keep up with the accelerating wave of new paradigms, terminology, and innovation unfolding around us.

Case in point: AI Factories

While this term first appeared in academic circles, it gained traction when Jensen Huang, the CEO of NVIDIA, used it to describe data centers or specialized compute environments built specifically for AI workloads. Suddenly, it seems everyone is talking about AI Factories, announcing products for them, or drafting articles on them. Yet the vast majority of people are still new to the term.

Another case in point: Confidential AI

Confidential AI is built on Confidential Computing, the technology that protects data in use by performing computations in a secure, isolated environment known as a Trusted Execution Environment (TEE). Confidential Computing prevents unauthorized access even while data is being processed, or in use, thus providing greater assurance of data privacy and security for enterprises. Confidential AI extends the core principles of Confidential Computing to secure the entire AI lifecycle, protecting proprietary models, training data, and inference requests from cloud providers and even system admins.

As AI adoption grows and sensitive data and models move into production, understanding AI Factories and Confidential AI is essential. The purpose of this blog is to dive further into these less familiar topics and discuss how they enable organizations to secure data and AI models, pave the way for trust, and enforce sovereignty without slowing innovation.

What is an AI Factory?

An AI Factory refers to a full-stack AI infrastructure deployment — including accelerators (GPUs or AI chips), networking, storage, and services — that is installed inside an organization’s existing or newly planned on-premises locations, effectively turning that facility into a dedicated environment for building, training, and running AI at scale.

What is the reason for creating AI Factories?

Running AI workloads is no small feat. Training and running AI models require extreme compute density, high-bandwidth networking, massive parallel processing, and substantial power. Traditional data centers are not optimal for AI because they were not designed to handle that scale, efficiency, and continuous optimization. Thus, AI Factories were created to deliver purpose-built infrastructure optimized specifically for large-scale, accelerated AI workloads.

What kinds of hardware and services are included in an AI Factory?

This is an extensive answer, which requires its own FAQ. But in a nutshell, AI Factories require specialized hardware with GPUs, TPUs, or other specialized AI chips. They also must have high-performance networking for dense parallel compute and low-latency interconnects.

AI lives on data, so data storage, integration, ingestion, streaming, and processing solutions are critical for model training and inference. Then there is the orchestration and services management layer, which automates containerized workloads and all sorts of service dependencies, scheduling, health checks, GPU resource sharing across containers, VMs, and hosts, and so on.

Of course, all this needs governance and monitoring, because observability and insights into logs, metrics, access controls, and so on are critical to ensure data and AI security, compliance, and alignment with regulations. Lastly, all this is powered by AI software and frameworks that handle the training, optimization, execution of jobs, and so on.

Easy, right?

What about security in AI Factories?

Now this is another great question, which also requires its own FAQ. A comprehensive AI Factory security architecture requires platforms that protect the infrastructure, workloads, and networks. Combined with real-time threat detection that continuously monitors for malicious activity, data poisoning, and model tampering across the AI infrastructure, these platforms can secure the complex AI Factory environment.

When it comes to data and proprietary AI models, the cyber stakes are highest. Confidential AI is the use of confidential computing to protect all stages of AI, including training and inference, by isolating AI workloads in hardware-based secure environments called Trusted Execution Environments (TEEs). It is the only way to:

- Protect data in use, not just at rest or in transit, ensuring sensitive information is secured even while being processed.

- Secure the AI models (weights, parameters, biases) from unauthorized access, extraction, tampering, and fine-tuning, even by privileged administrators.

Are Confidential Computing and Confidential AI the same?

Confidential Computing is the foundational technology. It uses secure enclaves to isolate sensitive workloads and data from the host system, including the operating system and hypervisor. It supports attestation, which cryptographically verifies that trusted code is running in a secure environment as expected before sensitive data or keys are released to the enclave.

Confidential Computing requires robust key protection mechanisms, such as secure key release via a Hardware Security Module, to ensure encryption keys are provisioned securely and are only accessible inside the secure enclave at runtime.

Confidential AI is a specific application of Confidential Computing that secures the AI components (data, model weights, prompts, embeddings, and the AI's logic) throughout the full life cycle, including training, fine-tuning, and inference. Confidential AI helps address the biggest risks and barriers to AI adoption: model theft, model poisoning, data leakage, and compliance with data sovereignty and regulatory requirements.

Is Confidential Computing enough to run AI workloads in a secure environment?

While Confidential Computing technology ensures data and models remain secured even while in use, it alone is not sufficient. Teams need to verify that both CPUs and GPUs are operating in confidential modes before allowing access to sensitive data and models. Without unified verification, there is no safe way to release encryption keys, secrets, or proprietary models to the workload.

How can it be verified that workloads are running in a Confidential Computing Environment?

Verification that workloads are running in a Confidential Computing environment is possible through cryptographic attestation. The workload attestation needs to be composite, meaning both the CPU and the GPU need to be verified as a single, unified trust decision.

- CPU attestation verifies that the host system is running in a Trusted Execution Environment, such as a confidential VM or enclave, with the correct firmware, boot chain, and security configuration.

- GPU attestation confirms that the attached accelerator is operating in a confidential or isolated mode, with verified firmware and protected memory, ensuring that model weights and data processed on the GPU cannot be accessed by the host or other workloads.

Composite attestation cryptographically binds these proofs together and is the only way to ensure that both compute domains are trusted and correctly configured before the workload starts. Only when the combined attestation measurements match approved security policies are secrets, encryption keys, or AI models released to the workload.
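To make the idea of a single, unified trust decision more concrete, here is a minimal Python sketch. It assumes the CPU and GPU evidence has already been signature-verified against the vendors' certificate chains, which is the hard part and is out of scope here. The `Evidence` shape, the claim names, and the `POLICY` values are all hypothetical and chosen only for illustration; they do not reflect any particular vendor's report format.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    """Claims from one compute domain (hypothetical shape, assumed pre-verified)."""
    domain: str   # "cpu" or "gpu"
    nonce: str    # challenge value echoed back by the attester
    claims: dict  # e.g. {"confidential_mode": True}


def domain_trusted(evidence: Evidence, policy: dict, nonce: str) -> bool:
    """A domain passes only if it answers this challenge and matches every policy claim."""
    if evidence.nonce != nonce:
        return False
    required = policy[evidence.domain]
    return all(evidence.claims.get(key) == value for key, value in required.items())


def composite_decision(cpu: Evidence, gpu: Evidence, policy: dict, nonce: str) -> bool:
    """One trust decision: both domains must pass against the same challenge."""
    if cpu.domain != "cpu" or gpu.domain != "gpu":
        return False
    return domain_trusted(cpu, policy, nonce) and domain_trusted(gpu, policy, nonce)


# Hypothetical approved policy for illustration only.
POLICY = {
    "cpu": {"tee": "confidential-vm", "secure_boot": True},
    "gpu": {"confidential_mode": True, "firmware": "approved-fw"},
}

cpu_ok = Evidence("cpu", "n-123", {"tee": "confidential-vm", "secure_boot": True})
gpu_ok = Evidence("gpu", "n-123", {"confidential_mode": True, "firmware": "approved-fw"})
gpu_bad = Evidence("gpu", "n-123", {"confidential_mode": False, "firmware": "approved-fw"})

print(composite_decision(cpu_ok, gpu_ok, POLICY, "n-123"))   # True
print(composite_decision(cpu_ok, gpu_bad, POLICY, "n-123"))  # False
```

Tying both domains to the same nonce is one simple way to keep a valid CPU report from being paired with a GPU report captured in a different session.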

How do Hardware Security Modules (HSMs) and Key Management Systems (KMS) relate to Confidential AI?

Hardware Security Modules and Key Management Systems are critical to the security of AI workloads running in a confidential compute environment. HSMs generate and protect encryption keys in tamper-resistant hardware, while KMS centrally manages key access and policies.

The confidential compute environment ensures keys are never exposed to the OS, hypervisor, or rogue administrators, enabling encrypted data to be decrypted and processed securely inside trusted execution environments. Thus, Confidential AI depends on cryptographic posture management.
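As a rough illustration of attestation-gated key release, the Python sketch below models a toy key broker. The `KeyBroker` class and its methods are hypothetical and are not the API of any real HSM or KMS. A production system would keep the key inside tamper-resistant hardware and release it wrapped to a key that exists only inside the TEE, whereas this sketch simply returns the raw bytes.

```python
import secrets


class KeyBroker:
    """Toy stand-in for a KMS/HSM front end that gates key release on attestation."""

    def __init__(self) -> None:
        self._keys: dict[str, bytes] = {}
        self._pending_nonces: set[str] = set()

    def create_key(self, key_id: str) -> None:
        # A real HSM would generate and hold this key in tamper-resistant hardware.
        self._keys[key_id] = secrets.token_bytes(32)

    def challenge(self) -> str:
        """Issue a fresh nonce that the workload must embed in its attestation."""
        nonce = secrets.token_hex(16)
        self._pending_nonces.add(nonce)
        return nonce

    def release_key(self, key_id: str, nonce: str, attestation_passed: bool) -> bytes:
        """Release the key at most once per challenge, and only after a passing attestation."""
        if nonce not in self._pending_nonces:
            raise PermissionError("unknown or replayed nonce")
        self._pending_nonces.discard(nonce)  # one-time use blocks replay
        if not attestation_passed:
            raise PermissionError("attestation did not satisfy policy")
        return self._keys[key_id]


broker = KeyBroker()
broker.create_key("model-weights")
nonce = broker.challenge()
key = broker.release_key("model-weights", nonce, attestation_passed=True)
print(len(key))  # 32
```

The nonce is consumed even when attestation fails, so a rejected workload has to request a new challenge rather than retry with the old one.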

Can you elaborate on the threats that Confidential AI protects against?

Confidential AI closes the security and exposure gap for data and models by processing AI workloads inside a Trusted Execution Environment. Thus, Confidential AI mitigates the risk of:

- "Rogue Admin" Attacks: It blocks anyone with root/admin privileges on the host server (including your own sysadmins or a cloud provider's staff) from dumping the memory of the VM to steal proprietary IP.

- Exposure of data in use: In a standard IT environment or AI data center, data is encrypted at rest (disk) and in transit (network), but it is vulnerable while in use (in memory/VRAM). Processing the data in a TEE protects data in memory, commonly referred to as data in use.

- Model Theft/IP Protection: This is often the biggest driver for AI Factories built on Confidential Computing. The Trusted Execution Environment prevents malicious actors from stealing proprietary model weights while they are loaded onto the GPUs for training or inference.

- Hypervisor Compromise: If a hacker exploits a vulnerability in the virtualization layer (e.g., KVM, VMware), they still cannot read the encrypted memory of your workloads, whether traditional or AI.

- Data Poisoning & Integrity: It ensures that the code and data being processed haven't been tampered with. Remember, composite attestation for the CPU and GPU proves, rather than assumes, that sensitive data and AI workloads are protected. So, through composite attestation, you can cryptographically verify that the specific signed version of your training software is running before you release the decryption keys for your data.
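The integrity check in the last bullet comes down to comparing a measurement of the running code against an approved value. The Python sketch below illustrates only that comparison step. In a real TEE the measurement is taken by hardware and firmware and delivered in a signed attestation report, whereas here the bytes are hashed directly. The function names and the sample artifact are hypothetical.

```python
import hashlib
import hmac


def measure(artifact: bytes) -> str:
    """Return the SHA-256 digest of an artifact as a hex string."""
    return hashlib.sha256(artifact).hexdigest()


def is_approved(artifact: bytes, approved_measurements: set[str]) -> bool:
    """True only if the artifact's digest equals one of the approved measurements."""
    digest = measure(artifact)
    # compare_digest performs a constant-time comparison of the two hex strings.
    return any(hmac.compare_digest(digest, ok) for ok in approved_measurements)


# Hypothetical training code and allowlist for illustration only.
trainer_v1 = b"def train(): ...  # release 1.0"
approved = {measure(trainer_v1)}

print(is_approved(trainer_v1, approved))                  # True
print(is_approved(trainer_v1 + b" # patched", approved))  # False
```

Any change to the artifact, even a single byte, produces a different digest, so a modified training binary would not match the allowlist and the keys would stay locked.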

What benefits do AI Factories offer over traditional cloud setups?

AI Factories deliver robust data and model security, regulatory compliance, and exceptional performance at scale. By operating in environments purpose-built and optimized for AI, organizations can achieve cloud-like training and inference performance within isolated, dedicated infrastructure they fully control.

When AI Factories leverage Confidential Computing to enable Confidential AI, governments and enterprises retain end-to-end control over the AI lifecycle. This enables them to safely drive innovation, economic growth, and strategic initiatives while meeting strict data sovereignty, privacy, and regulatory mandates. Through composite attestation, teams can prove that AI workloads are isolated and secure at runtime, so sensitive data and AI models remain protected—regardless of where the underlying infrastructure is deployed.

Who should consider using an AI Factory?

AI Factories are ideal for organizations that need to build, scale, and maintain AI solutions securely and efficiently, with the utmost focus on data and model protection. This includes enterprises with strict data-privacy requirements, teams managing large, sensitive, or mission-critical datasets, and companies looking to automate processes, improve model accuracy, or standardize AI development across their organization.

Who should consider Confidential AI?

Anyone who cares about the security, trust, and governance of their models and data. Even with security best practices and a full IT security stack, vulnerabilities exist and breaches will happen. Confidential AI is the only way to mitigate cyber risk and safely drive innovation.

What AI workloads or use-cases are well-suited for AI Factories?

AI Factories enable the training of large language models (LLMs), large-scale inference, and AI-powered applications for data-sensitive and regulated workloads across industries such as financial services, healthcare, and drug discovery.

They are also ideally suited for AI use cases that demand both high performance and stringent control over data residency, security, and compliance—including national AI infrastructure initiatives and national telecommunications providers.

What problems do AI Factories solve for organizations?

With a reference design guide, such as this one from NVIDIA for governments and regulated deployments, organizations can significantly reduce the time and complexity required to build AI infrastructure from scratch.

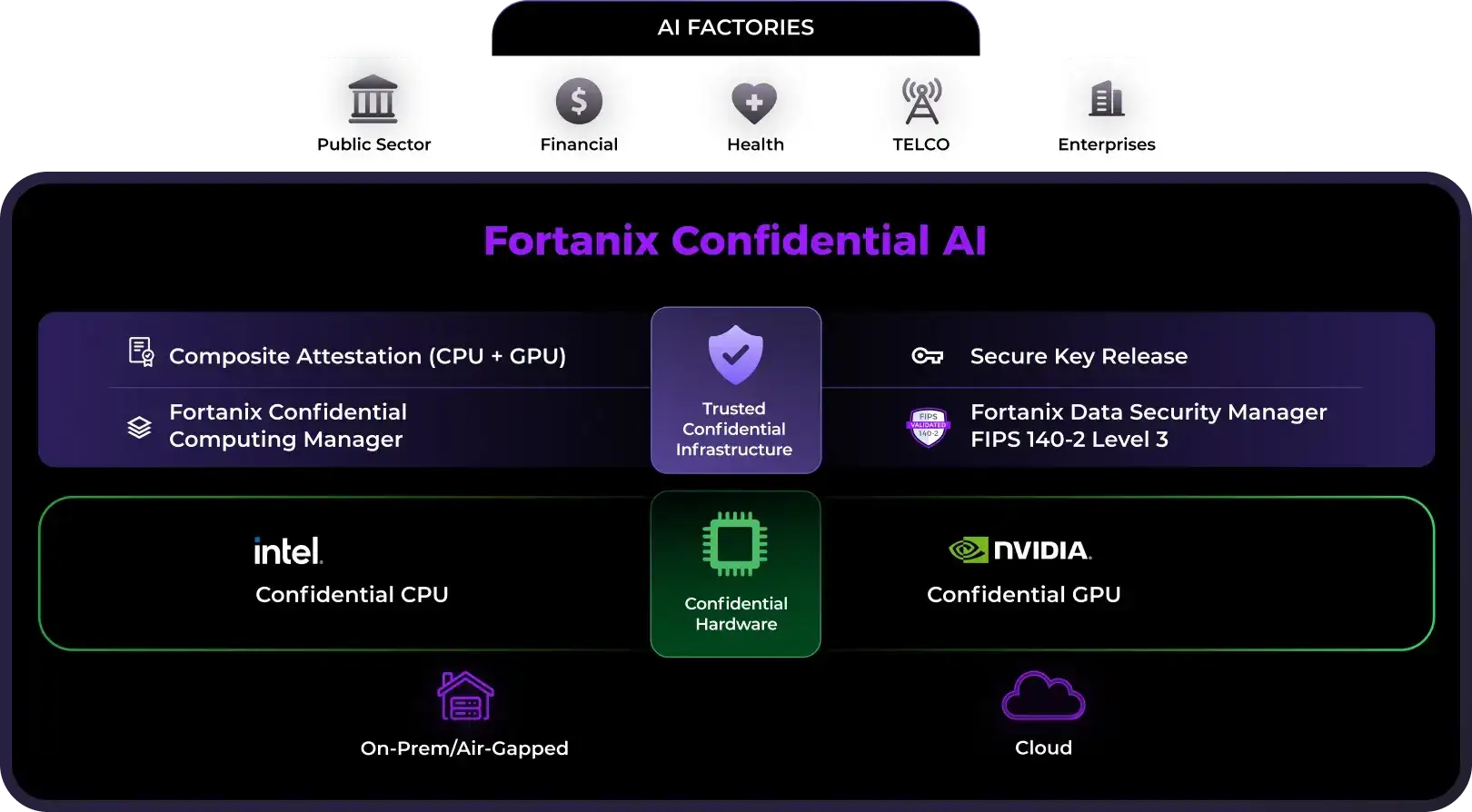

Is Fortanix part of the AI Factory stack?

Thought you’d never ask. Fortanix Confidential AI gives governments and enterprises the ability to run sovereign and trusted AI with verified confidentiality, compliance, and control inside their AI Factories or neoclouds.

Built to run on NVIDIA Hopper and NVIDIA Blackwell confidential computing GPUs, Fortanix provides AI teams with a secure foundation for training LLMs and deploying AI agents on sensitive data.

Backed by composite attestation for both CPUs and GPUs, as well as secure key release from the Fortanix Data Security Manager (DSM), which is also built on confidential computing, organizations can ensure their data, prompts, AI agents, and models remain protected even while in use, all while meeting strict regulatory and compliance requirements.

What value does Fortanix Confidential AI deliver to enterprises?

Fortanix Confidential AI provides organizations with a proven path to innovation—placing data protection, model security, trust, and sovereignty at the core of AI operations.

For many enterprises, preventing the exposure of sensitive data, safeguarding AI models, and ensuring systems remain untampered have been major barriers to AI adoption—particularly for entities with strict data sovereignty and regulatory compliance requirements.

Confidential Computing serves as the foundational technology that removes these barriers, enabling organizations to run Confidential AI at scale while maintaining control and compliance.

While Trusted Execution Environments have existed for years, their application to AI remains new for many teams—making a turnkey, operationalized approach essential.

About Fortanix

Fortanix is the global leader in data-first cybersecurity and a pioneer of Confidential Computing. Its unified platform secures sensitive data across on-premises and multi-cloud environments—at rest, in transit, and in use—through advanced encryption and key management.

Fortanix’s encryption is resistant to all known cryptanalytic techniques, including the latest quantum computing algorithms, allowing for top-level compliance and operational simplicity while reducing risk and cost.

Trusted by leading enterprises and government agencies, Fortanix enables users to run applications and AI workloads entirely within secure hardware enclaves: isolated, tamper-proof environments.

As enterprises build modern AI factories, Fortanix provides the confidential AI foundation that protects data, models, and pipelines throughout the full AI lifecycle. This innovative approach, an industry standard known as Confidential Computing, has been supported by leading technology companies, including Intel, Microsoft, and NVIDIA.